Category: STEM (Science, Technology, Engineering and Mathematics)

ORIGINAL

Artificial and Deceitful Faces Detection Using Machine Learning


Balusamy Nachiappan¹ *, N Rajkumar² *, C Viji² *, A Mohanraj³ *

¹Prologis, Denver, Colorado 80202, USA.

²Department of Computer Science & Engineering, Alliance College of Engineering and Design, Alliance University, Bangalore, Karnataka, India

³Sri Eshwar College of Engineering, Coimbatore, Tamil Nadu, India

Cite as: Nachiappan B, Rajkumar N, Viji C, Mohanraj A. Artificial and Deceitful Faces Detection Using Machine Learning. Salud, Ciencia y Tecnología - Serie de Conferencias 2024; 3:611. https://doi.org/10.56294/sctconf2024611

Submitted: 08-12-2023 Revised: 16-02-2024 Accepted: 10-03-2024 Published: 11-03-2024

Editor: Dr. William Castillo-González

ABSTRACT

Security authentication is becoming widespread in many applications, such as high-value financial transactions. PIN and password authentication is the most common method, but because passwords have a finite length, their security level is low and they can be easily compromised. This work adds a new sensing dimension to state-of-the-art image-based face recognition through a multi-modal design. It combines complex visual features extracted from a recent facial recognition model with a custom Convolutional Neural Network (CNN) for facial authentication and feature extraction, improving the security of face recognition. The echo feature depends on the geometry and material of the face and therefore, unlike a purely image-based face recognition system, is not fooled by photographs or videos.

Keywords: Convolutional Neural Network; Image Forensics; Deep Learning; Generative Adversarial Network.


INTRODUCTION

The daily consumption of more than 100 million hours of video content has made fake news a danger to democracy and society. Deep neural networks have achieved impressive accuracy in identifying specific types of image-tampering attacks, but they frequently perform poorly against covert manipulation techniques. The proposed methodology offers a deep learning system, inspired by the uncanny valley phenomenon, for identifying fake and fraudulent faces. Rather than explicitly simulating human brain processes, it builds on the success of multi-task learning to determine whether a face is real and to predict its future state.

Unlike traditional strategies based on handcrafted features, deep learning uses cascaded layers to learn hierarchical representations from input data efficiently. CNNs and GANs are two innovative deep learning methods that have been studied extensively and have achieved excellent results in many image-related applications, including image style transfer, image super-resolution, image inpainting, and image steganalysis. The Generative Adversarial Network (GAN) is a powerful generative model that is widely applicable. Recent research has demonstrated that GANs can produce fake face images of excellent visual quality. If such fake faces are used for image manipulation, they could cause serious moral, ethical, and legal issues. Convolutional Neural Network (CNN)-based methods are used to detect fake faces in images generated by modern methods that produce visually plausible results. Experimental evidence indicates that the proposed techniques can yield highly effective outcomes, with a median precision of more than 99,4 %. To verify the proposed method's efficacy, comparison results were analyzed for several variants of the proposed CNN architecture, differing in the high-pass filter, the number of layer groups, and the activation function.
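One architectural variant mentioned above places a high-pass filter in front of the convolutional layers, so that the network sees fine-grained residue rather than smooth facial content. The NumPy sketch below illustrates the idea; the 3 × 3 kernel `HIGH_PASS_KERNEL` and the helper names are hypothetical choices for illustration, since the text does not specify which filter the authors used.

```python
import numpy as np

# Hypothetical 3x3 second-order high-pass kernel; the paper does not say which
# kernel its high-pass filter variant uses. Its weights sum to zero, and every
# row and column also sums to zero, so flat regions and linear ramps give no response.
HIGH_PASS_KERNEL = np.array([[-1.0,  2.0, -1.0],
                             [ 2.0, -4.0,  2.0],
                             [-1.0,  2.0, -1.0]]) / 4.0


def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """'Valid' 2-D cross-correlation, the operation a CNN convolution layer applies."""
    kh, kw = kernel.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def high_pass_residual(gray: np.ndarray) -> np.ndarray:
    """Suppress smooth face content so that the CNN sees mostly fine-grained residue."""
    return conv2d_valid(gray.astype(np.float64), HIGH_PASS_KERNEL)
```

Used as a fixed, non-trainable first layer, this filter turns a 128 × 128 patch into a 126 × 126 residual map.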

Digital image forensics is a subfield of digital forensics. The field, also known as forensic image analysis, focuses on the authenticity and content of images. It allows law enforcement to use relevant information to bring charges in a variety of criminal cases, not only those involving cybercrime. Deep learning is a branch of machine learning and artificial intelligence (AI) that mimics the way humans acquire certain kinds of knowledge. Deep learning is a crucial component of data science, which includes statistics and predictive modeling. Generative adversarial networks (GANs) are a powerful family of neural networks used for unsupervised learning. They were developed and introduced in 2014 by Ian J. Goodfellow. A GAN consists of two neural network models that compete with each other and are thereby able to analyze, capture, and reproduce the variations within a dataset.
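For reference, the competition between the two networks can be written as the minimax objective of the original GAN formulation by Goodfellow et al. Here D(x) is the discriminator's estimated probability that x is real, G(z) is the sample the generator produces from noise z drawn from p_z, and p_g denotes the distribution of generated samples. The second line gives the optimal discriminator for a fixed generator:

```latex
\begin{aligned}
\min_{G}\max_{D} V(D,G) &= \mathbb{E}_{x\sim p_{\text{data}}(x)}\big[\log D(x)\big] + \mathbb{E}_{z\sim p_{z}(z)}\big[\log\big(1 - D(G(z))\big)\big],\\
D^{*}_{G}(x) &= \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_{g}(x)}.
\end{aligned}
```

At the global optimum p_g = p_data, so the optimal discriminator outputs 1/2 everywhere and can no longer tell real samples from generated ones.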

Literature Review

TensorFlow uses dataflow graphs to represent computation, shared state, and the operations that mutate that state. The framework is presented in the study "TensorFlow: Large-Scale Machine Learning on Heterogeneous Distributed Systems".(1) It maps the nodes of a dataflow graph across many machines in a cluster and, within a machine, across multiple computational devices. The framework is flexible and can be used to express many different deep neural network models.(2)
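To make the dataflow idea concrete, the toy sketch below represents a graph as a dictionary of nodes, each holding an operation and the names of its inputs, evaluates it in dependency order, and assigns nodes to devices in turn. The graph format, `run_graph`, and `place_round_robin` are hypothetical illustrations, not TensorFlow's API, and TensorFlow's own node placement is considerably more sophisticated than round-robin.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Tuple

# Hypothetical toy format: node name -> (operation, names of the nodes it reads from).
Graph = Dict[str, Tuple[Callable[..., float], List[str]]]


def run_graph(graph: Graph, feeds: Dict[str, float]) -> Dict[str, float]:
    """Evaluate every node after its inputs; `feeds` supplies the source values."""
    predecessors = {name: set(inputs) for name, (_, inputs) in graph.items()}
    for name in feeds:
        predecessors.setdefault(name, set())
    values = dict(feeds)
    for name in TopologicalSorter(predecessors).static_order():
        if name in values:
            continue  # value supplied by the caller
        op, inputs = graph[name]
        values[name] = op(*(values[i] for i in inputs))
    return values


def place_round_robin(graph: Graph, devices: List[str]) -> Dict[str, str]:
    """Naive placement that spreads nodes across devices in turn."""
    return {name: devices[i % len(devices)] for i, name in enumerate(sorted(graph))}
```

For example, a graph computing `x * w + b` with feeds `x=2, w=3, b=1` evaluates the multiplication first and yields 7 at the addition node.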

Belhassen Bayar et al.(3) observe that a forger can alter an image in many different ways when creating a forgery, using a variety of image-editing operations. They propose a universal forensic approach to detecting image tampering, built on a novel convolutional layer designed to suppress an image's content and adaptively learn manipulation detection features.
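In Bayar and Stamm's constrained convolutional layer, as we understand it, each first-layer kernel is forced to act as a prediction-error filter: the central weight is fixed to -1 and the remaining weights are normalized to sum to 1, and this projection is re-applied after every training update. A minimal NumPy sketch of the projection step follows; the function name `constrain_kernel` is hypothetical.

```python
import numpy as np


def constrain_kernel(weights: np.ndarray) -> np.ndarray:
    """Project an odd-sized square kernel onto the constraint: center weight -1,
    all other weights summing to 1 (so the whole kernel sums to 0)."""
    w = np.array(weights, dtype=np.float64)  # copy, leaving the caller's array untouched
    c = w.shape[0] // 2
    w[c, c] = 0.0
    off_center_sum = w.sum()
    if np.isclose(off_center_sum, 0.0):
        raise ValueError("off-center weights sum to zero and cannot be normalized")
    w /= off_center_sum
    w[c, c] = -1.0
    return w
```

Because the whole kernel then sums to zero, constant image regions produce no output, which is how the layer suppresses content and emphasizes local manipulation traces.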

David Berthelot et al. describe a GAN as a minimax game in which the two players try to outwit each other. GAN training is far more difficult to converge, if it converges at all, than the training of other deep learning models. The energy-based GAN (EBGAN) uses a different training criterion.(4) Gang Cao et al. note that contrast enhancement (CE) is a typical retouching technique used to adjust the overall brightness and contrast of digital images, and that malicious users may also apply local contrast enhancement to create a realistic composite image. Many experiments have confirmed the efficacy and effectiveness of the suggested strategies. The proposed network is a CNN combined with pre-processing steps: the gray-level co-occurrence matrix (GLCM) of an image, which carries CE fingerprints, is fed to the CNN, and a constrained convolutional layer extracts high-frequency information from the GLCMs, even under JPEG compression.
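The GLCM mentioned above counts how often pairs of gray levels occur at a fixed spatial offset. A minimal NumPy sketch follows, assuming 8-bit grayscale input and non-negative offsets; `glcm` is a hypothetical helper, not the authors' implementation. Because contrast enhancement remaps gray levels, it tends to leave empty rows and columns and isolated peaks in such a matrix.

```python
import numpy as np


def glcm(gray: np.ndarray, dx: int = 1, dy: int = 0, levels: int = 256) -> np.ndarray:
    """Gray-level co-occurrence matrix: entry (i, j) counts pixel pairs in which a pixel
    of level i has a neighbor of level j at offset (dy, dx)."""
    if dx < 0 or dy < 0:
        raise ValueError("this sketch only handles non-negative offsets")
    g = np.asarray(gray, dtype=np.intp)
    h, w = g.shape
    first = g[:h - dy, :w - dx]   # reference pixels
    second = g[dy:, dx:]          # neighbors at the given offset
    counts = np.zeros((levels, levels), dtype=np.int64)
    np.add.at(counts, (first.ravel(), second.ravel()), 1)  # unbuffered accumulation
    return counts
```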

Jiansheng Chen et al.(5) note that median filtering detection has recently drawn considerable interest in image editing and image anti-forensic techniques. Current forensic methods for image median filtering mainly extract features manually. Their paper proposes a median filtering detection technique based on convolutional neural networks (CNNs), which learn features directly from the image.
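CNN-based median filtering detectors in this line of work are commonly described as operating on a median filter residual, that is, the difference between the median-filtered image and the image itself, rather than on raw pixels. The NumPy sketch below computes such a residual with a 3 × 3 window and edge replication; the window size and the helper names are assumptions.

```python
import numpy as np


def median3x3(gray: np.ndarray) -> np.ndarray:
    """3x3 median filter with edge replication at the borders."""
    padded = np.pad(gray.astype(np.float64), 1, mode="edge")
    h, w = gray.shape
    # Stack the nine shifted views and take the per-pixel median.
    neighbors = np.stack([padded[i:i + h, j:j + w] for i in range(3) for j in range(3)])
    return np.median(neighbors, axis=0)


def median_filter_residual(gray: np.ndarray) -> np.ndarray:
    """Median-filtered image minus the image itself."""
    return median3x3(gray) - gray.astype(np.float64)
```

An isolated impulse produces a large residual, whereas an image that has already been median filtered tends to change comparatively little when filtered again; this difference is the kind of cue a CNN can learn from.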

Mo Chen et al.(6) incorporate JPEG-phase awareness into the architecture of a convolutional neural network to improve the detection accuracy of such detectors. The "catalyst kernel", another innovation incorporated into the detector, allows the network to learn kernels that are more relevant for detecting stego signals. Hak-Yeol Choi et al.(7) define three types of attacks that occur frequently during image forgery and detect them when they are applied to images simultaneously. Since most attacks occur in combination in real life, their approach has more realistic advantages than conventional forensic schemes. Vincent Dumoulin et al.(8) observe that the diversity of painting styles offers a rich visual vocabulary for constructing images. The degree to which one can learn and parsimoniously capture this vocabulary measures our understanding of the higher-level features of paintings, if not of images in general.
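JPEG compresses an image in independent 8 × 8 blocks, so statistics can differ with a pixel's position inside its block (its JPEG phase). Phase awareness can be illustrated by splitting an array into the 64 sub-arrays that share the same phase, as in the NumPy sketch below. `split_jpeg_phases` is a hypothetical helper; in Chen et al.'s network such a split is, as we understand it, applied to feature maps inside the network rather than to raw pixels.

```python
import numpy as np


def split_jpeg_phases(x: np.ndarray) -> np.ndarray:
    """Split an (H, W) array into 64 sub-arrays, one per JPEG phase (i mod 8, j mod 8).

    Output shape is (64, H // 8, W // 8); index 8 * i + j holds phase (i, j)."""
    h, w = x.shape
    if h % 8 or w % 8:
        raise ValueError("height and width must be multiples of 8")
    return np.stack([x[i::8, j::8] for i in range(8) for j in range(8)])
```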

Edgar Simo-Serra et al. present a novel technique for image completion that produces images that are both locally and globally consistent. Using a fully convolutional neural network, it can fill in missing regions in images of any resolution. Ian Goodfellow et al.(10) present a new framework for estimating generative models via an adversarial process. It simultaneously trains a generative model G, which captures the data distribution, and a discriminative model D, which estimates the probability that a sample came from the training data rather than from G. The training objective for G is to maximize the probability that D makes a mistake. Satoshi Iizuka et al.(12) proposed a method for filling in missing regions of any shape in images of any resolution using a fully convolutional neural network. Global and local context discriminators, trained to distinguish real images from completed ones, are used to train the image completion network to produce consistent results.

Alarifi et al.(13) investigate methods for classifying three types of facial skin patches, namely normal skin, spots, and wrinkles, using both traditional machine learning and state-of-the-art convolutional neural networks. With an accuracy of 0.899, an F-measure of 0.852, and a Matthews Correlation Coefficient of 0.779, GoogLeNet outperforms the Support Vector Machine approach. Selvaraju et al.(14) proposed Gradient-weighted Class Activation Mapping (Grad-CAM), a technique for producing visual explanations of the decisions of CNN-based models; it outperforms previous approaches, helps assess model generalization, and provides insight into failure modes. Zhou et al.(15) revisit the global average pooling layer and explain how it enables a convolutional neural network (CNN) to have remarkable localization ability despite being trained only on image-level labels.

This yields a generic, localizable deep representation that exposes the implicit attention of a CNN on an image. Without using any bounding-box annotations for training, their network achieved a top-5 error of 37,1 % for object localization on ILSVRC 2014.
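Grad-CAM can be computed from the activations A_k of one convolutional layer and the gradients of a class score with respect to them: each map is weighted by its spatially averaged gradient, the weighted maps are summed, and a ReLU keeps only positive evidence. The sketch below assumes these arrays have already been extracted from a trained network (for example, a fake-face CNN); the function name `grad_cam` is hypothetical.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """activations, gradients: (K, H, W) arrays for one conv layer, where gradients hold
    d(class score)/d(activations). Returns an (H, W) map scaled to [0, 1]."""
    weights = gradients.mean(axis=(1, 2))             # alpha_k: spatially averaged gradients
    cam = np.tensordot(weights, activations, axes=1)  # sum_k alpha_k * A_k
    cam = np.maximum(cam, 0.0)                        # ReLU: keep positive evidence only
    peak = cam.max()
    return cam / peak if peak > 0 else cam
```

For a network that ends in global average pooling followed by a linear layer, the averaged gradients are proportional to the linear-layer weights, so this computation reduces, up to scale, to the class activation map of Zhou et al.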

METHOD

Statistically analyzing eye-tracking results, recorded while users judge whether a face is fake, can clarify which kinds of features are informative. Moreover, models built on these observations may help extend the findings of this investigation. Recent research indicates that local facial traits are crucial for identifying fake faces, especially when combined with global face features. The accuracy of automated fake face detection may therefore be further improved by designing the CNN to incorporate these local regions into its predictions.

Face recognition module

The module provides an appearance- and texture-based method for creating realistic facial expressions in fake faces, which are difficult to imitate artificially because of variations in appearance, skin, color, and gender.

Face Detection

The automatic fake face estimation method uses twelve block-search windows to locate multi-scale face candidates during fake face capture. Adjusting the brightness of each candidate allows it to be identified more precisely.
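The candidate search described above might be sketched as follows: square windows of several sizes are slid across the image, and each candidate patch has its brightness normalized before classification. The window sizes, the stride of half a window, the target mean of 128, and the helper names are all assumptions, since the text specifies only that twelve windows are used.

```python
import numpy as np
from typing import Iterator, Sequence, Tuple


def candidate_windows(height: int, width: int, sizes: Sequence[int],
                      stride_frac: float = 0.5) -> Iterator[Tuple[int, int, int]]:
    """Yield (top, left, size) for square windows of each size, sliding by stride_frac * size."""
    for size in sizes:
        step = max(1, int(size * stride_frac))
        for top in range(0, height - size + 1, step):
            for left in range(0, width - size + 1, step):
                yield top, left, size


def adjust_brightness(patch: np.ndarray, target_mean: float = 128.0) -> np.ndarray:
    """Shift a patch so its mean intensity equals target_mean, then clip to [0, 255]."""
    shifted = patch.astype(np.float64) + (target_mean - float(patch.mean()))
    return np.clip(shifted, 0.0, 255.0)
```

Passing twelve sizes, for example `sizes=[32 + 16 * k for k in range(12)]`, would mirror the twelve windows mentioned in the text; that particular choice is again only illustrative.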

Pre-Processing

Preprocessing improves the recognition rate by reducing the effects of noise, illumination, color intensity, background, and orientation.
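As one example of the illumination normalization mentioned above, global histogram equalization spreads an 8-bit image's gray levels over the full 0–255 range. The NumPy sketch below is an illustrative choice; the text does not state which preprocessing operations the authors used.

```python
import numpy as np


def equalize_histogram(gray: np.ndarray) -> np.ndarray:
    """Global histogram equalization of an 8-bit grayscale image (values 0-255)."""
    g = np.asarray(gray).astype(np.uint8)
    hist = np.bincount(g.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]          # CDF value at the darkest gray level present
    denom = cdf[-1] - cdf_min
    if denom == 0:
        return g.copy()                # single gray level: nothing to spread out
    # Map each gray level through the normalized CDF (a lookup table).
    lut = np.clip(np.round((cdf - cdf_min) / denom * 255.0), 0, 255).astype(np.uint8)
    return lut[g]
```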

Feature Extraction

Gabor wavelets are used in the fake facial feature extractor because of their biological relevance and computational capabilities.
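A Gabor filter is a Gaussian envelope multiplied by a sinusoidal carrier; a bank of such filters at several orientations is a common way to build a Gabor feature extractor. The NumPy sketch below generates the real part of the kernel; the kernel size, sigma, wavelength, aspect ratio, and the eight orientations are assumed values, not parameters reported in the paper.

```python
import numpy as np
from typing import List


def gabor_kernel(size: int, sigma: float, theta: float, lambd: float,
                 gamma: float = 0.5, psi: float = 0.0) -> np.ndarray:
    """Real part of a 2-D Gabor filter on a size x size grid (size should be odd).

    sigma: width of the Gaussian envelope; theta: orientation in radians;
    lambd: wavelength of the cosine carrier; gamma: spatial aspect ratio; psi: phase offset.
    """
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(np.float64)
    x_rot = x * np.cos(theta) + y * np.sin(theta)
    y_rot = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(x_rot ** 2 + (gamma * y_rot) ** 2) / (2.0 * sigma ** 2))
    carrier = np.cos(2.0 * np.pi * x_rot / lambd + psi)
    return envelope * carrier


def gabor_bank(size: int = 31, sigma: float = 4.0, lambd: float = 10.0,
               n_orientations: int = 8) -> List[np.ndarray]:
    """Kernels at evenly spaced orientations in [0, pi)."""
    return [gabor_kernel(size, sigma, k * np.pi / n_orientations, lambd)
            for k in range(n_orientations)]
```

Convolving a face image with each kernel in the bank and pooling the response magnitudes (for example, their mean and standard deviation) would yield an orientation-sensitive texture descriptor.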

Fake face detection

A CNN is used to train the fake face estimators, with a separate model for each face group. Fake face detection is the subfield of computer vision concerned with identifying fake or altered facial images. With the emergence of Deepfakes and other sophisticated methods for creating fake face images, there is a growing need for robust and trustworthy fake face detection methods to fight fraud and malicious activity.

Edge detector

Edge detectors can be used to detect boundaries in fake faces, but some perform poorly because facial edges often have soft transitions. Robinson's algorithm convolves the image with a set of directional masks and keeps the maximum response, thereby detecting the strongest transition across different directions.

It has been demonstrated that CNN-based techniques are useful for identifying fake and fraudulent faces.

Figure 1. System Flow Diagram

Here is a high-level description of a possible CNN-based technique for distinguishing real from fake facial images:

· Data Collection: Obtain a dataset of real facial images and a dataset of fake face images. Fake face images can be created with several methods, including Deepfakes, GANs, or facial morphing.

· Preprocessing: Preprocessing converts raw input data into a format suitable for analysis by machine learning algorithms. Here, the pixel values are normalized, the images are resized to a standard size, and they are converted to grayscale.

· Training: Use the preprocessed images to train a CNN model. The model should contain multiple convolutional layers, pooling layers, and fully connected layers, and it must learn the characteristics that distinguish real facial images from fake ones.

· Validation: Evaluate the CNN model's performance on a validation set, using metrics such as accuracy, precision, recall, and F1-score.

· Testing: Evaluate the CNN model's performance on a test set. To verify that the model generalizes to new data, the test set must be disjoint from the training and validation sets.

· Deployment: Deploy the trained CNN model in a practical application, such as a fraud detection or face recognition system.
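The preprocessing and data-splitting steps listed above might look like the NumPy sketch below. The BT.601 grayscale weights, nearest-neighbor resizing, the 128 × 128 target size, scaling to [0, 1], and the 80/10/10 split are assumptions for illustration; the text does not specify them.

```python
import numpy as np
from typing import Tuple


def to_grayscale(rgb: np.ndarray) -> np.ndarray:
    """Luma from an (H, W, 3) RGB array using ITU-R BT.601 weights."""
    return rgb[..., :3].astype(np.float64) @ np.array([0.299, 0.587, 0.114])


def resize_nearest(img: np.ndarray, size: int) -> np.ndarray:
    """Nearest-neighbor resize of a 2-D array to size x size."""
    h, w = img.shape[:2]
    rows = (np.arange(size) * h) // size
    cols = (np.arange(size) * w) // size
    return img[rows][:, cols]


def preprocess(rgb: np.ndarray, size: int = 128) -> np.ndarray:
    """Grayscale, resize, and scale 8-bit values to [0, 1]."""
    return resize_nearest(to_grayscale(rgb), size) / 255.0


def split_indices(n: int, val_frac: float = 0.1, test_frac: float = 0.1,
                  seed: int = 0) -> Tuple[np.ndarray, np.ndarray, np.ndarray]:
    """Shuffle 0..n-1 once and cut it into disjoint train/validation/test index sets."""
    order = np.random.default_rng(seed).permutation(n)
    n_test, n_val = int(n * test_frac), int(n * val_frac)
    return order[n_test + n_val:], order[n_test:n_test + n_val], order[:n_test]
```

Splitting indices once, before any training, keeps the validation and test images disjoint from the training images, as the testing step requires.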

By learning the key characteristics that separate real from fake facial images, CNN-based methods can, in general, identify fake and fraudulent faces successfully. However, this approach is not perfect and may fail to detect some fake facial images. To ensure the security and accuracy of facial recognition systems, these techniques must continue to be developed and improved.

EXPERIMENTAL SETUP AND RESULT ANALYSIS

To demonstrate the efficacy of the proposed strategy, this section first describes the image dataset used in our research and then presents several tests that show the effectiveness of the proposed method for spotting fake face images, together with further tests that demonstrate the rationale of the proposed approach.

Image Data Set

Our study uses 30,000 real face images from the CelebA-HQ dataset and 30,000 fake face images from the fake face image database 1 created by AND Fake Face Identification.

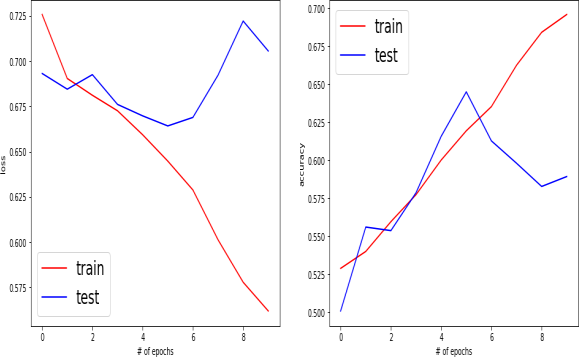

The goal of this section is to determine whether a given face image is authentic or fake. We found that the background regions of some fake face images look unnatural, as shown inside the blue box, which may help improve detection performance. To reduce the influence of image backgrounds, a small 128 × 128 region is cropped from each 256 × 256 image in the image set, ensuring that each cropped region contains only a few key facial features (such as the eyes, nose, and mustache), as shown in the red box in figure 2. Finally, we obtain two image sets for the tests, as shown in the graph below: the original images, which include both the face and the background, and the cropped images, which contain only the main facial features.
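The cropping step can be sketched as below. The text does not state where the 128 × 128 patch is taken, so a central crop is assumed by default; on aligned face images such as those in CelebA-HQ, the center of the image is plausibly dominated by the eyes, nose, and mouth. `crop_patch` and its `top`/`left` parameters are hypothetical.

```python
import numpy as np
from typing import Optional


def crop_patch(img: np.ndarray, size: int = 128, top: Optional[int] = None,
               left: Optional[int] = None) -> np.ndarray:
    """Cut a size x size patch (works for grayscale or color arrays); defaults to the center."""
    h, w = img.shape[:2]
    if size > h or size > w:
        raise ValueError("patch is larger than the image")
    top = (h - size) // 2 if top is None else top
    left = (w - size) // 2 if left is None else left
    if not (0 <= top <= h - size and 0 <= left <= w - size):
        raise ValueError("patch would extend outside the image")
    return img[top:top + size, left:left + size]
```

For a 256 × 256 input, the default crop starts at row 64 and column 64.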

Figure 2. Train and Test

Figure 2 visualizes the relationship between the performance of a machine learning model on its training data and on its test data. It indicates that the model may be overfitting the training data. Overfitting is a phenomenon in which a model performs very well on the training data but poorly on unseen data. One possible reason a model may overfit is that it is too complex for the amount of data available.

More than 300 images from the real and fake instances of Test Set-III of FFW were randomly selected, and the embedding spaces of the HAMN and MesoInception-4 models were visualized in two dimensions. The NTM model exhibits the weakest performance among the memory architectures, underscoring the weakness of single-level attention in memory retrieval. Adding a tree-structured memory yields a minor improvement, but because the various embeddings mix semantic information, this approach cannot learn to distinguish reliably between fake and real images. However, when measured in terms of APCER, the performance loss between seen and unseen attacks is relatively small compared with CNN-based systems such as GoogLeNet/InceptionV3 and the well-known MesoNet-4 and MesoInception-4. This suggests that the long-term dependency modeling of memory networks improves performance. The proposed HAMN method successfully differentiated real from fake examples, forming hypotheses about the observed faces in terms of their appearance and anticipated behavior and learning the fundamentals of real human faces.

Depending on the task, different parameters may be used to define the performance of a model, but typical metrics used to assess classification models include:
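APCER, used above, is the attack presentation classification error rate: the fraction of attack (here, fake) samples wrongly accepted as bona fide. Its counterpart, BPCER, is the fraction of bona fide (real) samples wrongly rejected as attacks. A minimal sketch, assuming boolean labels in which True marks an attack; the helper name is hypothetical. ISO/IEC 30107-3 reports APCER separately for each attack type, which corresponds to calling this function once per group, for example once for seen and once for unseen attacks.

```python
import numpy as np
from typing import Sequence, Tuple


def apcer_bpcer(is_attack: Sequence[bool], predicted_attack: Sequence[bool]) -> Tuple[float, float]:
    """APCER: share of attack (fake) samples accepted as bona fide.
    BPCER: share of bona fide (real) samples rejected as attacks."""
    truth = np.asarray(is_attack, dtype=bool)
    pred = np.asarray(predicted_attack, dtype=bool)
    apcer = float(np.mean(~pred[truth])) if truth.any() else float("nan")
    bpcer = float(np.mean(pred[~truth])) if (~truth).any() else float("nan")
    return apcer, bpcer
```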

Accuracy: The percentage of instances that were properly classified out of all the instances.

Precision: The proportion of true positives among all positive predictions (true positives and false positives).

Recall: The proportion of true positives among all actual positive instances (true positives and false negatives), also called the true positive rate.

F1 score: The harmonic mean of precision and recall, which balances the two metrics.
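These metrics follow directly from the four counts of a binary confusion matrix. The plain-Python sketch below treats fake as the positive class (label 1), which is an assumption, and also computes the Matthews Correlation Coefficient reported by Alarifi et al.; `classification_metrics` is a hypothetical helper.

```python
import math
from typing import Dict, Sequence


def classification_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Binary metrics with label 1 (fake) as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)

    accuracy = (tp + tn) / len(pairs) if pairs else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0  # true positive rate
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall,
            "f1": f1, "mcc": mcc}
```

For labels `[1, 1, 1, 0, 0, 0]` and predictions `[1, 1, 0, 1, 0, 0]`, four of six samples are correct, precision, recall, and F1 all equal 2/3, and the MCC is 1/3.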

CONCLUSION

In this paper, we first propose a CNN-based method for distinguishing authentic face images from fake ones generated by the most recent techniques. We then present the results of extensive experiments demonstrating that the proposed technique can distinguish real from fake face images accurately. Our experimental findings also show that, although current GAN-based methods can generate realistic-looking faces (and other image content and scenes), they may inevitably introduce noticeable statistical artifacts, which can even serve as evidence that an image is fake. A generative adversarial network (GAN) is a powerful generative model that creates new samples by learning the distribution of a large amount of training data. A GAN usually consists of a discriminator and a generator. The discriminator learns to determine whether its input data is real or fake, while the generator learns to produce fake data that is indistinguishable from real data. During training, the two compete against each other until the generator can produce the most convincing fake data.

BIBLIOGRAPHIC REFERENCES

1. Abadi M, Agarwal A, Barham P, Brevdo E, Chen Z, Citro C, et al. TensorFlow: Large-scale machine learning on heterogeneous distributed systems. arXiv preprint arXiv:1603.04467 (2016).

2. Bayar B, Stamm MC. A deep learning approach to universal image manipulation detection using a new convolutional layer. In Proceedings of the 4th ACM Workshop on Information Hiding and Multimedia Security. 2016:5–10.

3. Berthelot D, Schumm T, Metz L. BEGAN: Boundary equilibrium generative adversarial networks. arXiv preprint arXiv:1703.10717 (2017).

4. Cao G, Zhao Y, Ni R, Li X. Contrast enhancement-based forensics in digital images. IEEE Transactions on Information Forensics and Security. 2014;9(3):515–525.

5. Chen J, Kang X, Liu Y, Wang ZJ. Median filtering forensics based on convolutional neural networks. IEEE Signal Processing Letters. 2015;22(11):1849–1853.

6. Chen M, Sedighi V, Boroumand M, Fridrich J. JPEG-Phase-Aware Convolutional Neural Network for Steganalysis of JPEG Images. In ACM Workshop on Information Hiding and Multimedia Security. 2017:75–84.

7. Choi HY, Jang HU, Kim D, Son J, Mun SM, Choi S, et al. Detecting composite image manipulation based on deep neural networks. In IEEE International Conference on Systems, Signals and Image Processing.

8. Alarcon JCM. Information security: A comprehensive approach to risk management in the digital world. SCT Proceedings in Interdisciplinary Insights and Innovations 2023;1:84-84. https://doi.org/10.56294/piii202384.

9. Alarifi JS, Goyal M, Davison AK, Dancey D, Khan R, Yap MH. Facial skin classification using convolutional neural networks. In International Conference Image Analysis and Recognition. Springer. 2017:479–485.

10. Anandakumar H, Arulmurugan R. Early Detection of Lung Cancer using Wavelet using Neural Networks Classifier. In Computational Vision and Bio Inspired Computing, Lecture Notes in Computational Vision and Biomechanics, Springer Book Series, Volume No – 28, Chapter No – 09. 2017. ISBN: 978-3-319-71766-1.

11. Auza-Santiváñez JC, Díaz JAC, Cruz OAV, Robles-Nina SM, Escalante CS, Huanca BA. Bibliometric Analysis of the Worldwide Scholarly Output on Artificial Intelligence in Scopus. Gamification and Augmented Reality 2023;1:11-11. https://doi.org/10.56294/gr202311.

12. Auza-Santivañez JC, Lopez-Quispe AG, Carías A, Huanca BA, Remón AS, Condo-Gutierrez AR, et al. Improvements in functionality and quality of life after aquatic therapy in stroke survivors. AG Salud 2023;1:15-15. https://doi.org/10.62486/agsalud202315.

13. Barrios CJC, Hereñú MP, Francisco SM. Augmented reality for surgical skills training, update on the topic. Gamification and Augmented Reality 2023;1:8-8. https://doi.org/10.56294/gr20238.

14. Batista-Mariño Y, Gutiérrez-Cristo HG, Díaz-Vidal M, Peña-Marrero Y, Mulet-Labrada S, Díaz LE-R. Behavior of stomatological emergencies of dental origin. Mario Pozo Ochoa Stomatology Clinic. 2022-2023. AG Odontologia 2023;1:6-6. https://doi.org/10.62486/agodonto20236.

15. Cano CAG, Castillo VS. Systematic review on Augmented Reality in health education. Gamification and Augmented Reality 2023;1:28-28. https://doi.org/10.56294/gr202328.

16. Cardenas DC. Health and Safety at Work: Importance of the Ergonomic Workplace. SCT Proceedings in Interdisciplinary Insights and Innovations 2023;1:83-83. https://doi.org/10.56294/piii202383.

17. Castillo-González W. Kinesthetic treatment on stiffness, quality of life and functional independence in patients with rheumatoid arthritis. AG Salud 2023;1:20-20. https://doi.org/10.62486/agsalud202320.

18. Cuervo MED. Exclusive breastfeeding. Factors that influence its abandonment. AG Multidisciplinar 2023;1:6-6. https://doi.org/10.62486/agmu20236.

19. Diaz DPM. Staff turnover in companies. AG Managment 2023;1:16-16. https://doi.org/10.62486/agma202316.

20. Dionicio RJA, Serna YPO, Claudio BAM, Ruiz JAZ. Sales processes of the consultants of a company in the bakery industry. Southern Perspective / Perspectiva Austral 2023;1:2-2. https://doi.org/10.56294/pa20232.

21. Dumoulin V, Shlens J, Kudlur M. A learned representation for artistic style. In Proceedings of International Conference on Learning Representations. 2017.

22. Figueredo-Rigores A, Blanco-Romero L, Llevat-Romero D. Systemic view of periodontal diseases. AG Odontologia 2023;1:14-14. https://doi.org/10.62486/agodonto202314.

23. Frank M, Ricci E. Education for sustainability: Transforming school curricula. Southern Perspective / Perspectiva Austral 2023;1:3-3. https://doi.org/10.56294/pa20233.

24. Gómez LVB, Guevara DAN. Analysis of the difference of the legally relevant facts of the indicator facts. AG Multidisciplinar 2023;1:17-17. https://doi.org/10.62486/agmu202317.

25. Gonzalez-Argote D, Gonzalez-Argote J, Machuca-Contreras F. Blockchain in the health sector: a systematic literature review of success cases. Gamification and Augmented Reality 2023;1:6-6. https://doi.org/10.56294/gr20236.

26. Gonzalez-Argote J, Castillo-González W. Productivity and Impact of the Scientific Production on Human-Computer Interaction in Scopus from 2018 to 2022. AG Multidisciplinar 2023;1:10-10. https://doi.org/10.62486/agmu202310.

27. Goodfellow I, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, et al. Generative adversarial nets. In Advances in neural information processing systems. 2014:2672–2680.

28. Herera LMZ. Consequences of global warming. SCT Proceedings in Interdisciplinary Insights and Innovations 2023;1:74-74. https://doi.org/10.56294/piii202374.

29. Iizuka S, Simo-Serra E, Ishikawa H. Globally and locally consistent image completion. ACM Transactions on Graphics. 2017;36(4):107:1–107:14.

30. Ledesma-Céspedes N, Leyva-Samue L, Barrios-Ledesma L. Use of radiographs in endodontic treatments in pregnant women. AG Odontologia 2023;1:3-3. https://doi.org/10.62486/agodonto20233.

31. Lopez ACA. Contributions of John Calvin to education. A systematic review. AG Multidisciplinar 2023;1:11-11. https://doi.org/10.62486/agmu202311.

32. Marcelo KVG, Claudio BAM, Ruiz JAZ. Impact of Work Motivation on service advisors of a public institution in North Lima. Southern Perspective / Perspectiva Austral 2023;1:11-11. https://doi.org/10.56294/pa202311.

33. Millán YA, Montano-Silva RM, Ruiz-Salazar R. Epidemiology of oral cancer. AG Odontologia 2023;1:17-17. https://doi.org/10.62486/agodonto202317.

34. Mosquera ASB, Román-Mireles A, Rodríguez-Álvarez AM, Mora CC, Esmeraldas E del CO, Barrios BSV, et al. Science as a bridge to scientific knowledge: literature review. AG Multidisciplinar 2023;1:20-20. https://doi.org/10.62486/agmu202320.

35. Niranjani V, Selvam NS. Overview on Deep Neural Networks: Architecture, Application and Rising Analysis Trends. In EAI/Springer Innovations in Communication and Computing. 2020:271–278.

36. Ojeda EKE. Emotional Salary. SCT Proceedings in Interdisciplinary Insights and Innovations 2023;1:73-73. https://doi.org/10.56294/piii202373.

37. Olguín-Martínez CM, Rivera RIB, Perez RLR, Guzmán JRV, Romero-Carazas R, Suárez NR, et al. Bibliometric analysis of occupational health in civil construction works. AG Salud 2023;1:10-10. https://doi.org/10.62486/agsalud202310.

38. Osorio CA, Londoño CÁ. El dictamen pericial en la jurisdicción contenciosa administrativa de conformidad con la ley 2080 de 2021. Southern Perspective / Perspectiva Austral 2024;2:22-22. https://doi.org/10.56294/pa202422.

39. Polo LFB. Effects of stress on employees. AG Salud 2023;1:31-31. https://doi.org/10.62486/agsalud202331.

40. Pupo-Martínez Y, Dalmau-Ramírez E, Meriño-Collazo L, Céspedes-Proenza I, Cruz-Sánchez A, Blanco-Romero L. Occlusal changes in primary dentition after treatment of dental interferences. AG Odontologia 2023;1:10-10. https://doi.org/10.62486/agodonto202310.

41. Ramos YAV. Little Attention of Companies in the Commercial Sector Regarding the Implementation of Safety and Health at Work in Colombia During the Year 2015 to 2020. SCT Proceedings in Interdisciplinary Insights and Innovations 2023;1:79-79. https://doi.org/10.56294/piii202379.

42. Roa BAV, Ortiz MAC, Cano CAG. Analysis of the simple tax regime in Colombia, case of night traders in the city of Florencia, Caquetá. AG Managment 2023;1:14-14. https://doi.org/10.62486/agma202314.

43. Rodríguez LPM, Sánchez PAS. Social appropriation of knowledge applying the knowledge management methodology. Case study: San Miguel de Sema, Boyacá. AG Managment 2023;1:13-13. https://doi.org/10.62486/agma202313.

44. Romero-Carazas R. Prompt lawyer: a challenge in the face of the integration of artificial intelligence and law. Gamification and Augmented Reality 2023;1:7-7. https://doi.org/10.56294/gr20237.

45. Saavedra MOR. Revaluation of Property, Plant and Equipment under the criteria of IAS 16: Property, Plant and Equipment. AG Managment 2023;1:11-11. https://doi.org/10.62486/agma202311.

46. Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D, et al. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In ICCV. 2017:618–626.

47. Solano AVC, Arboleda LDC, García CCC, Dominguez CDC. Benefits of artificial intelligence in companies. AG Managment 2023;1:17-17. https://doi.org/10.62486/agma202317.

48. Valdés IYM, Valdés LC, Fuentes SS. Professional development, professionalization and successful professional performance of the Bachelor of Optometry and Opticianry. AG Salud 2023;1:7-7. https://doi.org/10.62486/agsalud20237.

49. Velásquez AA, Gómez JAY, Claudio BAM, Ruiz JAZ. Soft skills and the labor market insertion of students in the last cycles of administration at a university in northern Lima. Southern Perspective / Perspectiva Austral 2024;2:21-21. https://doi.org/10.56294/pa202421.

50. Zhou B, Khosla A, Lapedriza A, Oliva A, Torralba A. Learning deep features for discriminative localization. In Computer Vision and Pattern Recognition (CVPR), 2016 IEEE Conference on. IEEE. 2016:2921–2929.

FINANCING

No financing.

CONFLICT OF INTEREST

None.

AUTHORSHIP CONTRIBUTION

Conceptualization: Balusamy Nachiappan, N Rajkumar, C Viji, A Mohanraj.

Data curation: Balusamy Nachiappan, N Rajkumar, C Viji, A Mohanraj.

Formal analysis: Balusamy Nachiappan, N Rajkumar, C Viji, A Mohanraj.

Acquisition of funds: Balusamy Nachiappan, N Rajkumar, C Viji, A Mohanraj.

Research: Balusamy Nachiappan, N Rajkumar, C Viji, A Mohanraj.

Methodology: Balusamy Nachiappan, N Rajkumar, C Viji, A Mohanraj.

Project management: Balusamy Nachiappan, N Rajkumar, C Viji, A Mohanraj.

Resources: Balusamy Nachiappan, N Rajkumar, C Viji, A Mohanraj.

Software: Balusamy Nachiappan, N Rajkumar, C Viji, A Mohanraj.

Supervision: Balusamy Nachiappan, N Rajkumar, C Viji, A Mohanraj.

Validation: Balusamy Nachiappan, N Rajkumar, C Viji, A Mohanraj.

Visualization: Balusamy Nachiappan, N Rajkumar, C Viji, A Mohanraj.

Writing - original draft: Balusamy Nachiappan, N Rajkumar, C Viji, A Mohanraj.

Writing - proofreading and editing: Balusamy Nachiappan, N Rajkumar, C Viji, A Mohanraj.