doi: 10.56294/sctconf2024.1119

Category: STEM (Science, Technology, Engineering and Mathematics)

ORIGINAL

Radar Based Secure Contactless Fall Detection Using Hybrid Optimizer with Convolution Neural Network

Secure Contactless Fall Detection Based on Radar Using a Hybrid Optimizer with a Convolutional Neural Network

Nester Jeyakumar M1 *, Jasmine Samraj2 *, Bennet Rajesh M3 *

1Assistant Professor, Department of Computer Science, Loyola College, Chennai 600034, India.

2Rtd. Associate Professor, PG Research Department of Computer Science, QUAID-E-MILLATH Government College for Women (Autonomous), Chennai 600002, India.

3Assistant Professor, Department of Computer Science, Government Arts and Science College, Nagercoil-629004, India.

Cite as: Jeyakumar M N, Samraj J, Rajesh M B. Radar Based Secure Contactless Fall Detection Using Hybrid Optimizer with Convolution Neural Network. Salud, Ciencia y Tecnología - Serie de Conferencias. 2024; 3:1119. https://doi.org/10.56294/sctconf2024.1119

Submitted: 20-02-2024 Revised: 05-05-2024 Accepted: 02-09-2024 Published: 03-09-2024

Editor: Dr. William Castillo-González

Corresponding Author: Nester Jeyakumar M *

ABSTRACT

Introduction: falls among senior citizens can lead to severe injuries. Existing wearable fall-alert sensors are often ineffective because seniors tend to avoid using them, which highlights the need for non-contact sensor applications in smart homes. This study proposes a CNN-based fall detection system using time-frequency analysis. A unique hybrid optimizer, GWO-ABC, which combines the Artificial Bee Colony (ABC) and Grey Wolf Optimizer (GWO) algorithms, is employed to optimize CNN architectures. Radar return signals are transformed into spectrograms and binary images, which are used to train the resulting hybrid optimizer CNN (HOCNN) on fall and non-fall data.

Method: radar signals are processed with the short-time Fourier transform (STFT) to create time-frequency spectrograms, which are then converted into binary images. These images are fed into a CNN optimized by the GWO-ABC algorithm. The CNN is trained on labelled fall and non-fall instances and focuses on high-level feature extraction.

Results: the HOCNN showed superior accuracy in fall detection, successfully extracting critical high-level features from radar signals. Performance metrics, including precision, recall, and F1-score, demonstrated significant improvements over traditional methods.

Conclusions: this study introduces a non-contact, automatic fall detection system for smart homes using GWO-ABC-optimized CNNs, offering a promising solution for enhancing geriatric care and ensuring senior citizen safety.

Keywords: Grey Wolf Optimizer; Artificial Bee Colony Algorithm; Convolutional Neural Network; Fall Detection; Time-Frequency Analysis; Ultra-Wideband (UWB) Radar.

SUMMARY

Introduction: older people can suffer serious injuries from falls. Existing wearable fall-alert sensors are often ineffective because older people tend to avoid using them, which highlights the need for non-contact sensor applications in smart homes. This study proposes a CNN-based fall detection system that uses time-frequency analysis. A unique hybrid optimizer, GWO-ABC, which combines Artificial Bee Colony (ABC) and Grey Wolf Optimizer (GWO), is used to optimize CNN architectures. Radar return signals are transformed into spectrograms and binary images to train the HOCNN with fall and non-fall data.

Method: radar signals are processed with the short-time Fourier transform to create time-frequency spectrograms, which are converted into binary images. These images are fed into a CNN optimized by the GWO-ABC algorithm. The CNN is trained on labelled fall and non-fall instances, focusing on the extraction of high-level features.

Results: the HOCNN showed superior accuracy in fall detection, successfully extracting critical high-level features from the radar signals. Performance metrics, including precision, recall and F1-score, showed significant improvements over traditional methods.

Conclusions: this study presents an automatic, non-contact fall detection system for smart homes that uses GWO-ABC-optimized CNNs, offering a promising solution for improving geriatric care and ensuring the safety of older people.

Keywords: Grey Wolf Optimizer; Artificial Bee Colony Algorithm; Convolutional Neural Network; Fall Detection; Time-Frequency Analysis; Ultra-Wideband (UWB) Radar.

INTRODUCTION

In mid-2019, there were around 7,7 billion people on the planet, and this number is steadily growing.(1) Over the next 30 years, the proportion of older individuals will increase rapidly, accounting for 11,1 %–18,6 % of the population,(2) as healthcare improves and birth control becomes more widespread. Consequently, the proportion of working people relative to seniors is declining quickly. This will result in a global workforce shortage in a variety of fields, including senior care. The European Commission's Telecare consortium project was initiated to support care of the elderly,(3) and numerous other nations in different regions have started similar ventures.

At home, most elderly people spend their time in bedrooms, living rooms or bathrooms, where they are considered to desire seclusion.(4) Elderly people need rapid help after falling because they are more likely to suffer a fatal fall.(5) To monitor elderly people in their rooms continuously without employing caretakers, automatic fall detection and alarm systems must be designed to preserve privacy and trust.

Currently, costly wearable sensors such as accelerometers are used to detect activity.(6) Radar-based non-contact indoor monitoring is becoming more popular as smart homes take off, because radar signals can pass through barriers, including walls, to identify targets.(7) Additionally, radar-based techniques eliminate the need to carry a sensor(8) and do not infringe the monitored person's right to privacy.(9) UWB (ultra-wideband) radars are growing in popularity for active monitoring because they identify falls quickly and perform better than continuous-wave radars: they are resistant to multipath fading and offer stronger penetration and finer temporal and spatial resolution.(10) To detect falls, radar-based monitoring techniques can use thresholds(11) or learning-based approaches.(12) Threshold-based fall detection requires effective features or descriptors, and an alert is raised when these descriptors exceed predetermined thresholds during a fall. Learning-based techniques require models to be trained with time- and/or frequency-based features.
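As a minimal illustration of threshold-based detection, the sketch below raises an alert once a scalar descriptor has stayed above a threshold for a few consecutive frames. The descriptor, the threshold value and the `min_frames` persistence rule are hypothetical; the cited threshold-based works define their own descriptors and rules.

```python
def threshold_alerts(descriptor, threshold, min_frames=3):
    """Return the frame indices at which an alert is raised.

    An alert fires when the descriptor has been above `threshold` for
    `min_frames` consecutive frames (a persistence rule that suppresses
    single-frame spikes). It can fire again only after the descriptor
    has dropped back below the threshold.
    """
    alerts, run = [], 0
    for i, value in enumerate(descriptor):
        run = run + 1 if value > threshold else 0   # length of the current exceedance
        if run == min_frames:
            alerts.append(i)
    return alerts
```

For example, `threshold_alerts([0.1, 0.2, 0.9, 0.95, 0.97, 0.99, 0.2], 0.5)` raises a single alert at frame 4, the third consecutive frame above 0,5.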

Micro-Doppler radar data have been used to detect human activities(12) based on time and cadence-velocity features. Wu et al.(13) used time-frequency characteristics of radar Doppler signals to identify falls, classifying events with a sparse Bayesian classifier based on statistical information from three features. All of these techniques require manual feature engineering, and classification accuracy depends on the designed features. Deep learning techniques (DLTs) can instead extract features automatically for fall detection.(14) Jokanovic et al.(15) used stacked autoencoders to extract features from grayscale spectrogram images and a deep neural network (DNN) to detect falls, with softmax regression used to categorise different types of activities, including falls. Wagner et al.(16) explored transfer learning for categorising activities from wearable sensor data: a pre-trained AlexNet extracted features from spectrogram images, and linear and nonlinear support vector machines (SVMs) classified the samples. Lang et al.(17) fed colour and grayscale time-frequency representations into a CNN to classify simulated human micro-Doppler signals. All of these earlier studies extracted features from the objects specifically. None of them used automatically extracted, shape-based features of the energy distribution in the joint time-frequency domain for radar-based fall detection.

This work detects human falls from the energy of activities by examining binary image representations of the corresponding spectrograms. The binary images are enhanced with morphological operations and processed by a deep CNN (DCNN) that extracts features automatically. The proposed scheme is compared with other machine learning techniques (MLTs), including decision trees (DTs), k-nearest neighbours (KNNs) and SVMs, which are supplied with the same input as the CNN optimized with the hybrid GWO-ABC algorithm.

The rest of this article is organized as follows. Section II describes current UWB radar systems used for fall detection. Section III presents the proposed fall detection approach, including range selection, time-frequency analysis, binary image generation, data augmentation and automated feature extraction. Section IV discusses the experimental findings, existing difficulties and prospects for radar-based fall detection. Section V concludes the article.

Related Works

Maître et al.(18) used three UWB radars to detect falls in realistic circumstances, obtaining accuracies of 0,85 for the three-radar configuration and 0,87 for the single-radar configuration, with corresponding Cohen's kappa values of 0,70 and 0,76 in leave-one-out validation. However, for individual radar detection with the radar at position 3, the mean accuracy and Cohen's kappa were 0,68 and 0,83, respectively. When data from the closest radar were used for classification, Cohen's kappa values for the first, second and third positions were 0,95, 0,91 and 0,87, implying that fall identification with UWB radars depends on position and suggesting that multi-radar systems can compensate for this drawback. However, since only ten volunteers took part, these results should be interpreted with caution.

Saho et al.(19) used a dual Doppler radar system to detect falls in bathrooms, with radars fixed on the bathroom ceiling and on the walls in front of and behind the volunteers. The participants performed eight different movements, such as rising from a seated position and falling forward. Using short-time Fourier transforms and a CNN, the study obtained accuracies of 0,90, 0,92 and 0,96 for the ceiling radar, the wall radar and the dual-radar system, respectively. The study concluded that using two radars improves precision, because differences between falls and other movements can be detected in parallel in both the vertical and horizontal directions. Sensitivity and specificity for the single- and dual-radar systems were 1,00, implying that fall recognition with fewer radars could still provide good classification performance while reducing costs.

Yang et al.(20) examined the placement of single and dual radars. Micro-Doppler signatures generated from the raw data were classified using SVMs, while Fourier transforms were used for feature extraction. Three radars were employed: the first was installed on the ceiling at a height of 228 cm with a 20° downward tilt, the second was fixed on a table at a height of 100 cm, and the third was placed on the floor. Based on their findings, the authors concluded that the radar signature has the most distinctive features when the radar is placed on the floor. However, occlusion will be an issue in a genuine, complicated indoor scene. To capture the relevant directions of falling motion with a single sensor, a broad-beam radar should be mounted on the ceiling pointing vertically downward. When two sensors are used, the second radar should be placed lower, without interfering with the field of view of the first.

Mager et al.(21) demonstrated the efficacy of multi-sensor radio frequency (RF) sensing using a 24-node RF network distributed across a room at two levels: 17 cm above the floor (lower level) and 140 cm above the floor (higher level, corresponding to the upper part of the human torso). Human vertical position and motion could be tracked inside the network by analysing the attenuation in the radio tomographic images of each layer and computing the current posture (standing, intermediate or lying down). Time differences between standing and lying postures were then used to detect falls. However, such a system is costly because of the large number of sensors.

Carneiro et al.(22) used multiple streams of handcrafted features (optical flow, RGB values) for fall identification. CNNs were adapted to classify RGB videos and estimated human locations and postures; optical flow was used to extract feature vectors, and scenarios were classified as falls with a VGG-16 classifier. The models were trained on the URFD and FDD datasets, and the system achieved 98,77 % accuracy with fivefold shuffled cross-validation.

Casilari et al.(23) proposed fall detection using a DCNN that recognizes tri-axial signals from portable accelerometers to identify human falls. The scheme was tested on fourteen datasets, including MobiAct, SisFall, MobiFall, UniMiB SHAR and UP-Fall, and achieved high accuracy (99,22 %), sensitivity (98,64 %) and specificity (99,63 %) on the SisFall dataset.

METHOD

This section presents the proposed fall detection approach, which is based on time-frequency representations of radar return signals and automated feature extraction with the HOCNN. The proposed radar-based technique is shown in figure 1.

Figure 1. Proposed Block Diagram

Experimental Input Setup

The radar employed in this experiment was the Novelda (Oslo, Norway) Xethru X4M03 development kit.(24) It uses patch antennas with a 65° aperture in both azimuth and elevation, together with a UWB transceiver operating in the 5,9–10,3 GHz band. This radar was selected for its low price, compact design and fine spatial resolution. The University of Ottawa conducted the trials in two real-world, cluttered rooms measuring 5,7 × 2,2 m. The radar was installed 1,5 m above the ground and sampled at 200 Hz, which is sufficient to capture the high-frequency components (up to 60 Hz) that radar signals exhibit during falls. The dataset contains fall and non-fall activities performed by ten subjects aged 20 to 35. The exercises included standing and falling perpendicular to the radar's line of sight, walking toward the radar and falling at different distances (3 to 4 m), and lying down and standing up, including side rolls with and without movement while lying down. Each activity was recorded for 15 s and digitized at a sampling rate of 200 Hz. The radar range was set to 10 m; with a range resolution of 5,35 cm, this yields 189 range bins. Table 1 lists the activities performed, together with their classes and counts. Figure 2 shows one subject's posture before and after a fall. The recordings were manually labelled as fall or non-fall. The experiments were approved by the University of Ottawa's Research Ethics Board.
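The acquisition settings can be cross-checked with a few lines of arithmetic. The sketch assumes uniformly spaced range bins of 5,35 cm starting at the radar; under that assumption 10 m corresponds to about 187 bins, slightly fewer than the 189 reported, so the exact count presumably depends on the radar's range-offset configuration, which the text does not give.

```python
FS_HZ = 200.0            # slow-time sampling rate (frames per second)
DURATION_S = 15.0        # length of each recorded activity
BIN_SPACING_M = 0.0535   # 5,35 cm range resolution
MAX_RANGE_M = 10.0       # configured radar range
FALL_BAND_HZ = 60.0      # highest fall-related frequency quoted in the text

frames_per_recording = int(FS_HZ * DURATION_S)   # slow-time samples per activity
nyquist_hz = FS_HZ / 2.0                          # highest frequency resolvable at 200 Hz
bins_for_range = MAX_RANGE_M / BIN_SPACING_M      # bins needed to span 10 m
clutter_zone_m = 20 * BIN_SPACING_M               # extent of the first 20 range bins

assert nyquist_hz > FALL_BAND_HZ                  # 200 Hz sampling covers the 60 Hz content
print(frames_per_recording, nyquist_hz, round(bins_for_range, 1), round(clutter_zone_m, 3))
# 3000 100.0 186.9 1.07
```

The last value shows that discarding the first 20 range bins removes roughly the first metre in front of the radar.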

Figure 2. Room based postures (a) Before falls (b) After falls

Table 1. Types and Activity Counts of 10 Different Subjects in Two Disparate Rooms

| Class | Description | # of Exp. | # of Exp. after augmentation (×10) |
|---|---|---|---|
| Fall | Stand in front of the radar and fall down | 61 | 610 |
| Fall | Walk toward the radar and fall down | 59 | 590 |
| Fall | Stand and fall down perpendicularly to the radar line of sight | 67 | 670 |
| Non-Fall | Lie down and stand up | 85 | 850 |
| Non-Fall | Lie down and stand up perpendicularly to the radar line of sight | 64 | 640 |

Selecting Target Ranges

The radar return signals were stored in a matrix whose rows correspond to slow-time observations (successive time intervals) and whose columns correspond to fast-time samples across the range bins. The first 20 range bins, which cover ranges of less than about 1 m from the radar, were discarded as noise.
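Range-bin selection can be sketched as follows for a slow-time × range-bin matrix. The clutter filter (subtracting each bin's mean over slow time, which removes static reflections) and the energy criterion for choosing the target bin are assumptions consistent with, but not specified by, the text; `select_target_bin` is a hypothetical name.

```python
import numpy as np


def select_target_bin(frames, skip_bins=20):
    """Choose the target range bin from a (slow time x range bins) matrix.

    Returns the bin index and that bin's clutter-removed signal, normalized
    to a maximum absolute amplitude of 1.
    """
    # Assumed clutter filter: subtract each bin's mean over slow time (static returns).
    residual = frames - frames.mean(axis=0, keepdims=True)
    energy = np.sum(np.abs(residual) ** 2, axis=0)   # motion energy per range bin
    energy[:skip_bins] = 0.0                         # discard bins closer than ~1 m
    target = int(np.argmax(energy))
    signal = residual[:, target]
    return target, signal / np.max(np.abs(signal))
```

Because the mean is removed per bin, a strong but static reflector (a wall or furniture) contributes no energy, while a moving subject does.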


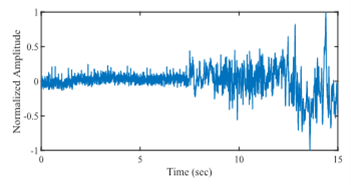

Figure 3. Return radar signals from targeted range bins (a) In Falls (b) In Standing up

Figures 3(a) and 3(b) show normalized radar return signals from the target range bins, i.e., the bins with maximum amplitude after clutter removal, for a fall and for a standing-up activity, respectively. In figure 3(a), the subject stands in front of the radar before falling, whereas in figure 3(b) the subject lies down before rising to face the radar.

Analysing Time Frequencies

It is well known that moving humans produce nonstationary radar return signals with time-varying frequency components. Time-frequency representations were therefore generated with the short-time Fourier transform (STFT) to investigate the radar return signals.(25) The STFT of the signal x[·] of a target range bin is given by equation (1):

$$X[n,k] = \sum_{m=-\infty}^{\infty} x[m]\, W[n-m]\, e^{-j 2\pi m k / N} \qquad (1)$$

where W[·] is a sliding window function of finite length, such as a Hamming window, m is the sample index, n is the time index, k = 0, 1, ..., N−1 is the frequency index and N is the number of frequency points. The spectrogram is the squared magnitude of the STFT, i.e., S(n,k) = |X[n,k]|². A 256-sample Hamming window, which has low side lobes, was applied to the radar data; its main-lobe width is inversely proportional to the window length. Consecutive windows overlapped by 80 % to reduce the influence of spectral leakage and to localize falls better. Smaller overlaps degraded performance; for instance, a 50 % overlap localized falls less accurately. With larger overlaps, the entire event is captured in at least one window.(26) The resulting time-frequency signatures are illustrated in figure 4.
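The spectrogram computation described above can be sketched with NumPy as follows. It assumes a real-valued range-bin signal, for which the one-sided FFT of a 256-sample window gives 129 frequency bins, consistent with m1 = 129 in the Results section; a complex baseband signal would instead need a two-sided FFT. The 80 % overlap is rounded to a hop of 51 samples, and the function name is hypothetical.

```python
import numpy as np


def spectrogram(x, win_len=256, overlap=0.8):
    """Squared-magnitude STFT S(n, k) = |X[n, k]|^2 of a real 1-D signal.

    Returns an array of shape (win_len // 2 + 1 frequency bins, time frames).
    """
    if len(x) < win_len:
        raise ValueError("signal is shorter than one window")
    hop = max(1, int(round(win_len * (1.0 - overlap))))   # 256 * 0.2 -> 51 samples
    window = np.hamming(win_len)                           # low side lobes
    n_frames = 1 + (len(x) - win_len) // hop
    frames = np.stack([x[i * hop : i * hop + win_len] * window for i in range(n_frames)])
    X = np.fft.rfft(frames, axis=1)                        # one-sided FFT per frame
    return (np.abs(X) ** 2).T                              # frequency x time
```

For a 15 s recording at 200 Hz (3000 samples), this yields a 129 × 54 spectrogram; a 25 Hz test tone peaks in frequency bin 32, since the bin spacing is 200/256 Hz.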

Figure 4. Time-frequency signatures of (a) falls and (b) standing

Generating Binary Images

Fall and non-fall activities captured by the radar were analysed and represented as binary images. When spectrograms of m1 × m2 pixels are computed, the binary images have the same size, corresponding to m1 frequencies and m2 time instants. The high level of noise in raw spectrogram images may hide the genuine profile of the activity under study, which can worsen classification results, especially with neural networks. This was handled by creating binary time-frequency signatures of the activities from their time-frequency representations, clustering the pixels with hybrid k-means clustering. Median filters were used to remove outliers in the clusters, and morphological opening eliminated disconnected regions while preserving the shape of the energy content. Opening relies on a structuring element,(27) which defines the processed pixel in the image together with its immediate neighbours. The result of this post-processing is a binary signature for each activity. Figure 4 shows the time-frequency signatures, and figure 5 shows the corresponding binary signatures for a fall and for an upright (non-fall) activity.
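A minimal sketch of the binarization pipeline, written with NumPy only. It is an illustration under assumptions: ordinary two-cluster k-means on the dB-scaled pixel values stands in for the hybrid k-means clustering (whose details are not given), and both the median filter and the morphological opening use a 3 × 3 square structuring element. The function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def kmeans_threshold(values, iters=50):
    """Two-cluster 1-D k-means (Lloyd's algorithm); returns the boundary between clusters."""
    lo, hi = float(values.min()), float(values.max())   # initial centroids
    for _ in range(iters):
        t = (lo + hi) / 2.0                              # nearest-centroid boundary
        low, high = values[values <= t], values[values > t]
        new_lo = low.mean() if low.size else lo
        new_hi = high.mean() if high.size else hi
        if np.isclose(new_lo, lo) and np.isclose(new_hi, hi):
            break
        lo, hi = new_lo, new_hi
    return (lo + hi) / 2.0


def _windows(img, size, pad_value):
    """All size x size neighbourhoods of img, padded with pad_value at the borders."""
    r = size // 2
    padded = np.pad(img, r, mode="constant", constant_values=pad_value)
    return sliding_window_view(padded, (size, size))


def binarize_spectrogram(S, size=3):
    """Spectrogram -> binary time-frequency signature with the same shape as S."""
    log_s = 10.0 * np.log10(S + 1e-12)                   # work in dB
    mask = log_s > kmeans_threshold(log_s.ravel())       # high-energy cluster -> True
    # Median filter; on a 0/1 image this is a majority vote over each window.
    mask = np.median(_windows(mask.astype(float), size, 0.0), axis=(-2, -1)) > 0.5
    # Morphological opening: erosion followed by dilation with a square element.
    eroded = _windows(mask, size, False).all(axis=(-2, -1))
    return _windows(eroded, size, False).any(axis=(-2, -1))
```

An isolated high-energy pixel is removed by the median filter, whereas a compact region of energy survives the opening with its interior intact.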

Figure 5. Binary time-frequency signatures of (a) falls and (b) upright positions

HOCNN

Automatic feature extraction does not require hand-crafted, domain-specific features. A neural network with convolutional and fully connected layers is therefore proposed to extract features automatically from the binary radar images. As described in Section III-C, time-frequency representations of activity energy are transformed into binary images (Z), which are then given to the CNN. The fundamental feature of this network is that it comprises many processing units, including convolution, pooling, activation and normalisation. This study investigates the use of neuro-evolution for the automatic construction of CNN topologies and develops a unique solution, HOCNN, based on the ABC and GWO algorithms.

Overview of GWO algorithm

The GWO algorithm, as described by Karthiga et al.(28), imitates the social behaviour of grey wolves when hunting and pursuing prey. Grey wolves have a rigid dominance hierarchy based on leadership qualities and usually live in packs of five to twelve members. The most dominant wolf, referred to as the alpha wolf, typically serves as the pack leader. The second- and third-level wolves in GWO are named beta and delta; these subordinate wolves help the alpha wolf make decisions during the hunt. All other wolves, which follow the high-ranking wolves as they pursue and approach the prey, are called omega wolves.

The mathematical model of GWO described below is based on the social hierarchy of grey wolves and their methods of encircling, stalking and attacking prey.

Social structure: initially, a specified number of wolves (solutions) are placed at random locations within the search space. According to their rank, the three best solutions are designated the alpha (α), beta (β) and delta (δ) wolves. These three wolves are the primary guiding forces in GWO's optimization process, and the remaining wolves are regarded as omega (ω) wolves.

Encircling prey: as mentioned above, grey wolves surround their prey during the hunt. For iteration t, this strategy is formulated mathematically by equations (2) and (3):

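$$\vec{D} = \left| \vec{C} \cdot \vec{X}_p(t) - \vec{X}(t) \right| \qquad (2)$$

$$\vec{X}(t+1) = \vec{X}_p(t) - \vec{A} \cdot \vec{D} \qquad (3)$$

where $\vec{X}_p(t)$ is the position of the prey, $\vec{X}(t)$ is the position of a grey wolf at iteration t, and $\vec{A}$ and $\vec{C}$ are coefficient vectors defined as $\vec{A} = 2\vec{a}\cdot\vec{r}_1 - \vec{a}$ and $\vec{C} = 2\vec{r}_2$. Here $\vec{r}_1$ and $\vec{r}_2$ are random vectors with components in [0, 1], and $\vec{a} = a_1(1 - t/t_{Max})$ decreases linearly from $a_1$ to 0, where $a_1 = 2$ in GWO and $t_{Max}$ is the number of iterations.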

Hunting: the hunting process of GWO is guided by the three best solutions (wolves), α, β and δ. The omega wolves update their positions based on these leading solutions, as represented mathematically by equations (4) and (5):
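The position-update equations referred to here are not reproduced in this version of the text. For reference, the canonical GWO hunting update is shown below in the notation of equations (2) and (3); it is a reconstruction of the standard algorithm, not necessarily the authors' exact equations or numbering. Each wolf estimates its distance to each of the three leaders, proposes one move per leader, and takes the average of the three moves:

$$
\begin{aligned}
\vec{D}_\alpha &= \left|\vec{C}_1\cdot\vec{X}_\alpha - \vec{X}\right|, &
\vec{D}_\beta &= \left|\vec{C}_2\cdot\vec{X}_\beta - \vec{X}\right|, &
\vec{D}_\delta &= \left|\vec{C}_3\cdot\vec{X}_\delta - \vec{X}\right| \\
\vec{X}_1 &= \vec{X}_\alpha - \vec{A}_1\cdot\vec{D}_\alpha, &
\vec{X}_2 &= \vec{X}_\beta - \vec{A}_2\cdot\vec{D}_\beta, &
\vec{X}_3 &= \vec{X}_\delta - \vec{A}_3\cdot\vec{D}_\delta \\
\vec{X}(t+1) &= \frac{\vec{X}_1 + \vec{X}_2 + \vec{X}_3}{3}
\end{aligned}
$$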

Attacking prey (exploitation): GWO is controlled by the parameter a, which decreases gradually over the iterations. The coefficient vectors $\vec{A}$ and $\vec{C}$ also control the search for prey. Each component of $\vec{A}$ takes a random value in [−a, a], and when |A| < 1 the wolves attack the prey.

Searching for prey (exploration): $\vec{A}$ also controls exploration in GWO. When |A| > 1, the wolves are pushed away from the current prey estimate, which diversifies the search.

Thus, $\vec{A}$ and $\vec{C}$ control exploration, and the range of $\vec{A}$ shrinks over successive generations. Because the solutions in the pack exchange little information, regions of the search space may remain poorly explored. Many studies have pointed out GWO's weak exploration as a significant issue, and this study therefore modifies the traditional GWO to address it.

Overview of ABC algorithm

The ABC algorithm, as specified by Karaboga et al.(29), mimics the foraging behaviour of a honey bee swarm using employed, onlooker and scout bees. The location of each food source represents a candidate solution, and the amount of nectar at the source determines its fitness. The numbers of employed and onlooker bees are equal, each being about 50 % of the population (colony size). An employed bee modifies its current food source according to the location held in its memory and informs the onlooker bees of the new source; the onlooker bees then explore new neighbourhoods based on this information. In ABC, the search is driven by perturbing a randomly chosen element of a solution vector using the corresponding element of another solution vector, according to equation (7).

$$v_{ij} = x_{ij} + \phi_{ij}\,\left(x_{ij} - x_{kj}\right) \qquad (7)$$

where $v_{ij}$ is the new solution obtained by modifying the jth dimension of solution $x_i$ using a different solution $x_k$ (k ≠ i) from the population, and $\phi_{ij}$ is a random value in [−1, 1]. Although the ABC update allows broad exploration, it makes little use of knowledge about good solutions: unlike other algorithms, ABC does not use the best solutions to guide the search, which may slow its convergence. Information about the best solutions has been observed to be crucial for improving convergence. GWO exploits the best solutions through its leadership hierarchy, so combining it with ABC yields an efficient algorithm that benefits from both.
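A minimal sketch of the neighbour search in equation (7), assuming a minimization problem and real-valued solution vectors stored as the rows of a NumPy array. The greedy selection step (keep the new solution only if it is fitter) belongs to the standard ABC algorithm but is not spelled out in the text above; both function names are hypothetical.

```python
import numpy as np


def abc_neighbour(solutions, i, rng):
    """Equation (7): v_ij = x_ij + phi_ij * (x_ij - x_kj) for one random dimension j."""
    n, dim = solutions.shape
    k = rng.choice([m for m in range(n) if m != i])   # random partner solution, k != i
    j = rng.integers(dim)                             # random dimension to modify
    phi = rng.uniform(-1.0, 1.0)                      # phi_ij drawn from [-1, 1]
    candidate = solutions[i].copy()
    candidate[j] = solutions[i, j] + phi * (solutions[i, j] - solutions[k, j])
    return candidate


def greedy_select(current, candidate, fitness):
    """Standard ABC greedy selection (minimization): keep the better of the two."""
    return candidate if fitness(candidate) < fitness(current) else current
```

Only one coordinate changes per move, which is why ABC explores broadly but, without a guide such as GWO's leaders, converges slowly.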

Hybrid GWO with ABC

Figure 6 shows the step-by-step flowchart of the proposed GWO-ABC algorithm. GWO-ABC resembles standard GWO except for additional strategies for population initialization and information exchange. The population size (N), the dimension of the solution space (dim) and the maximum number of function evaluations are defined before the parameters a, A and C are computed. After these initial parameters are defined, the flowchart is divided into three phases: population initialization, GWO and ABC. Each phase is explained below.

Figure 6. Flowchart of proposed GWO-ABC algorithm

Population initialization phase: to provide more suitable initial candidate solutions, the population is initialized with chaotic maps and opposition-based learning (OBL), which broaden the search space, as shown in figure 6. Steps 1 and 2 determine the initial population X of N solutions from ch(k), the random variables of a logistic chaotic map whose evolution function is given by equation (8).

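$$ch(k+1) = \mu\, ch(k)\,\bigl(1 - ch(k)\bigr) \qquad (8)$$

where k is the iteration count (maximum 300), the initial value ch(0) is selected randomly in (0, 1), and μ is the control parameter of the map, which is fully chaotic for μ = 4.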

Additionally, an opposite population X* is derived with the OBL equation in step 4, and both sets are combined into X ∪ X*, a set of 2N solutions whose fitness f(X) is computed. The fitness values are then sorted according to the elitism principle, and the N fittest solutions are chosen for the next generation.
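The initialization phase can be sketched as follows. This is an illustration under stated assumptions, not the authors' code: the logistic-map parameter μ = 4, the scaling of chaotic values onto the search bounds lb and ub, and the opposition rule x* = lb + ub − x (the usual OBL definition) are assumptions, and `chaotic_obl_init` is a hypothetical name.

```python
import numpy as np


def chaotic_obl_init(n, dim, lb, ub, fitness, rng, mu=4.0, k_max=300):
    """Chaotic (logistic-map) initialization followed by opposition-based learning."""
    ch = rng.uniform(0.01, 0.99, size=(n, dim))       # random ch(0) for every coordinate
    for _ in range(k_max):                            # iterate equation (8) k_max times
        ch = np.clip(mu * ch * (1.0 - ch), 0.0, 1.0)  # clip guards against float drift
    X = lb + ch * (ub - lb)                           # chaotic population in [lb, ub]
    X_opp = lb + ub - X                               # opposite population (OBL)
    pool = np.vstack([X, X_opp])                      # 2n candidate solutions
    scores = np.apply_along_axis(fitness, 1, pool)
    return pool[np.argsort(scores)[:n]]               # elitism: keep the n fittest
```

For example, `chaotic_obl_init(100, 3, -5.0, 5.0, lambda x: float(np.sum(x ** 2)), np.random.default_rng(0))` returns 100 solutions sorted from best to worst, mirroring the N = 100, n = 3 setting mentioned below.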

Figure 2 compares the initial population produced by GWO's random distribution approach with that of the proposed initialization scheme. Both techniques used a population size of N = 100 for function f9 with dimension n = 3. The initial population of the proposed scheme is evenly dispersed over the search space, which enables exploration.

GWO phase: after the initial population is generated, the algorithm proceeds as usual, updating its parameters and the positions of the search agents with equations (2) to (5).

ABC phase: employed and onlooker bees share knowledge with candidate solutions, modifying the previous solutions with equation (7). The term φij in equation (7) is generated by the logistic chaotic map of equation (8), whose sequences are unpredictable and non-repeating.

Choosing random neighbouring solutions and dimensions for information sharing improves the opportunities for exploration.

The GWO and ABC phases are executed repeatedly to obtain the best solution. Global search ability is improved by the ABC search equation (equation (6) in GWO), because pack members can exchange information with one another. As a result, the hybrid maintains the necessary balance between exploration and exploitation, reduces the loss of population diversity and avoids premature convergence. It also improves the chance of escaping local optima and reaching globally optimal solutions.
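Putting the phases together, the sketch below shows one plausible way to interleave a standard GWO update with an ABC-style information-sharing step driven by a logistic chaotic sequence. It is a hypothetical minimal implementation for a generic minimization problem, not the authors' code: uniform random initialization replaces the chaotic/OBL initialization for brevity, and the greedy acceptance rule and the rescaling φ = 2·ch − 1 are assumptions.

```python
import numpy as np


def gwo_abc(fitness, dim, lb, ub, n=20, iters=100, seed=0):
    """Hypothetical GWO-ABC hybrid for minimizing `fitness` over [lb, ub]^dim."""
    rng = np.random.default_rng(seed)
    X = rng.uniform(lb, ub, size=(n, dim))      # uniform init (chaotic/OBL init omitted)
    ch = rng.uniform(0.01, 0.99)                 # state of the logistic chaotic map
    best_x, best_f = None, np.inf
    for t in range(iters):
        f = np.apply_along_axis(fitness, 1, X)
        order = np.argsort(f)
        if f[order[0]] < best_f:                 # remember the best solution seen so far
            best_f, best_x = float(f[order[0]]), X[order[0]].copy()
        leaders = X[order[:3]]                   # alpha, beta, delta
        a = 2.0 * (1.0 - t / iters)              # decreases linearly from 2 to 0

        # GWO phase: average of the moves guided by the three leaders.
        new = np.zeros_like(X)
        for leader in leaders:
            A = 2.0 * a * rng.random(X.shape) - a
            C = 2.0 * rng.random(X.shape)
            new += leader - A * np.abs(C * leader - X)
        X = np.clip(new / 3.0, lb, ub)

        # ABC phase: each wolf shares information with a random partner (equation (7)).
        for i in range(n):
            k = rng.choice([m for m in range(n) if m != i])
            j = rng.integers(dim)
            ch = min(max(4.0 * ch * (1.0 - ch), 0.0), 1.0)   # logistic map, kept in [0, 1]
            phi = 2.0 * ch - 1.0                             # chaotic phi in [-1, 1]
            cand = X[i].copy()
            cand[j] = np.clip(X[i, j] + phi * (X[i, j] - X[k, j]), lb, ub)
            if fitness(cand) < fitness(X[i]):                # greedy acceptance
                X[i] = cand

    f = np.apply_along_axis(fitness, 1, X)
    if f.min() < best_f:
        best_f, best_x = float(f.min()), X[np.argmin(f)].copy()
    return best_x, best_f
```

Usage example: `gwo_abc(lambda v: float(np.sum(v ** 2)), dim=5, lb=-10.0, ub=10.0)` minimizes the sphere function. For the CNN, `fitness` would instead score a candidate hyperparameter vector.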

HOCNN

The hyperparameters of the proposed CNN were selected with the proposed GWO-ABC algorithm, which tries different hyperparameter values and selects the optimal ones based on validation accuracy.(30) The hyperparameters in this work are the learning rate, which regulates the size of the weight updates in response to classification errors, and the dropout rate, whose regularisation restricts the network's adaptation to the training data and thus avoids overfitting and problems of high dimensionality.
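To connect the optimizer with the CNN, each wolf's position has to be decoded into hyperparameter values and scored. The sketch below is hypothetical. The search ranges (log10 of the learning rate in [−5, −1], dropout in [0, 0.5]) and the `train_and_validate` callback are assumptions, because the text does not specify them. Minimizing 1 − validation accuracy is equivalent to selecting the values with the highest validation accuracy, as described above.

```python
import numpy as np

LR_LOG10_RANGE = (-5.0, -1.0)   # hypothetical search range for log10(learning rate)
DROPOUT_RANGE = (0.0, 0.5)      # hypothetical search range for the dropout rate


def decode(position):
    """Map an optimizer position in [0, 1]^2 to (learning_rate, dropout_rate)."""
    p = np.clip(np.asarray(position, dtype=float), 0.0, 1.0)
    lo, hi = LR_LOG10_RANGE
    learning_rate = 10.0 ** (lo + p[0] * (hi - lo))      # searched on a log scale
    dropout = DROPOUT_RANGE[0] + p[1] * (DROPOUT_RANGE[1] - DROPOUT_RANGE[0])
    return float(learning_rate), float(dropout)


def hyperparameter_fitness(position, train_and_validate):
    """Fitness to minimize: 1 - validation accuracy of a CNN trained with decoded values.

    `train_and_validate(learning_rate, dropout) -> accuracy` is a placeholder for
    the actual CNN training and validation routine.
    """
    learning_rate, dropout = decode(position)
    return 1.0 - train_and_validate(learning_rate, dropout)
```

Searching the learning rate on a log scale lets the optimizer spend equal effort on each order of magnitude.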

The network is constructed with two convolutional layers and four fully connected (FC) layers for binary classification. In the first convolutional layer c1, 64 kernels $\{k_j^{c1}\}_{j=1}^{64}$ of size 3 × 3 are convolved with the input image (stride 1). Bias values are then added, and activating the outputs yields feature maps with a depth of 64. Rectified linear unit (ReLU) activations, defined as f(x) = max(0, x), were used, and each kernel connects the convolutional layer to local patches of the previous layer's feature maps.

Non-overlapping 2 × 2 max-pooling layers p1 were used to make the features invariant to small distortions and to reduce the spatial resolution of the feature maps; pooling keeps the highest value among neighbouring neurons of the preceding convolution. Because the spatial size of the feature maps shrinks after pooling, more filters were used in the second convolutional layer to increase depth: after adding bias values and applying ReLU activations, it produced feature maps $Z^{c2}$ with a depth of 128. A second 2 × 2 max-pooling layer p2 was then added. The convolutional layers capture lower-level features, and the higher layers combine these inputs to extract higher-level features. In addition, neurons within the same feature map share the weights of the convolution kernels. After the convolutional layers, the proposed network has four FC layers with 500, 200, 100 and 50 neurons, followed by a single output layer that predicts the class. The outputs of the second convolution and pooling stage, $Z^{c2p2}$, are flattened into a one-dimensional vector that forms the input to the first FC layer. Each FC neuron is connected to all neurons of the adjacent layers; activations are computed by matrix multiplication and bias offsets, followed by ReLU activation in the four hidden layers and the softmax function of equation (9) in the output layer.
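The layer sizes above can be checked with a short shape and parameter count. The sketch assumes 'same' padding in both convolutional layers, a 3 × 3 kernel in the second layer (only the first layer's kernel size is stated), the 129 × 139 input size given in the Results section, and a two-neuron softmax output. With 'valid' padding the spatial sizes would be slightly smaller.

```python
def conv_same(h, w, c_in, c_out, k=3):
    """k x k convolution, stride 1, 'same' padding (assumed): spatial size is unchanged."""
    params = k * k * c_in * c_out + c_out          # weights + one bias per kernel
    return (h, w, c_out), params


def maxpool2(h, w, c):
    """Non-overlapping 2 x 2 max pooling: height and width are halved (floor)."""
    return (h // 2, w // 2, c)


def dense(n_in, n_out):
    """Fully connected layer: weight matrix plus one bias per neuron."""
    return n_out, n_in * n_out + n_out


def hocnn_summary(height=129, width=139, n_classes=2):
    params = []
    shape, p = conv_same(height, width, 1, 64)     # c1: 64 kernels of 3 x 3
    params.append(p)
    shape = maxpool2(*shape)                       # p1
    shape, p = conv_same(*shape, 128)              # c2: 128 kernels (3 x 3 assumed)
    params.append(p)
    shape = maxpool2(*shape)                       # p2 -> Z^{c2p2}
    flat = shape[0] * shape[1] * shape[2]          # flattened input to the first FC layer
    n = flat
    for units in (500, 200, 100, 50, n_classes):   # four FC layers + softmax output
        n, p = dense(n, units)
        params.append(p)
    return shape, flat, sum(params)


shape, flat, total = hocnn_summary()
print(shape, flat, total)   # (32, 34, 128) 139264 69832448
```

Under these assumptions, the first FC layer holds about 99,7 % of the roughly 69,8 million trainable parameters.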

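$$h_r = \frac{e^{Z_r}}{\sum_{q=1}^{2} e^{Z_q}}, \quad r = 1, 2 \qquad (9)$$

where $Z_r$ is the rth score of the output layer and $h_r$ is the output of the softmax function, i.e., the predicted probability of class r. If $N_{tr}$ denotes the number of training samples, with true fall/non-fall labels $\{l_i\}_{i=1}^{N_{tr}}$ and predicted probabilities $h_i$, the cross-entropy cost function J is defined by equation (10):

$$J = -\frac{1}{N_{tr}} \sum_{i=1}^{N_{tr}} \sum_{r=1}^{2} I(l_i = r)\, \log h_{i,r} \qquad (10)$$

where I(·) is the indicator function.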
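Equations (9) and (10) can be computed directly with NumPy, as in the minimal sketch below. It assumes the two classes are indexed 0 (fall) and 1 (non-fall) rather than r = 1, 2, and it uses the mean over the $N_{tr}$ samples. Subtracting the row maximum before exponentiating leaves the softmax unchanged but avoids overflow.

```python
import numpy as np


def softmax(Z):
    """Equation (9), row-wise: h_r = exp(Z_r) / sum_q exp(Z_q)."""
    Z = Z - Z.max(axis=1, keepdims=True)     # shift for numerical stability
    e = np.exp(Z)
    return e / e.sum(axis=1, keepdims=True)


def cross_entropy(Z, labels):
    """Equation (10): mean of -log h_{i, l_i}; the indicator selects the true class."""
    h = softmax(Z)
    n_tr = Z.shape[0]
    return float(-np.mean(np.log(h[np.arange(n_tr), labels])))
```

With uninformative scores (all zeros), each sample contributes log 2, so the cost equals log 2 ≈ 0,693; confident correct scores drive it toward 0.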

Minimizing this cost jointly learns the features and optimizes the classification, which results in better discrimination in the final classification.

Data Augmentations

Because people do not trip and fall regularly, few fall recordings are available. Data augmentation artificially increases the number of samples or patterns in the training set, which improves the generalization of fall detection and helps avoid network overfitting. Augmentation methods are frequently divided into two categories: data warping and oversampling.(31) Oversampling strategies, such as random oversampling or synthetic minority oversampling, deliberately increase the number of exemplars, but the new exemplars are essentially derived from those already in the dataset. Data warping instead creates exemplars that differ from the existing ones by transforming the images with techniques such as geometric transforms, flips and crops. Fall detection depends on the radar's height and angle, the return power, the presence or absence of clutter and other factors. Because a dataset cannot cover all of these variations, data augmentation is required, and oversampling is inappropriate because it does not create exemplars representative of these variabilities. To capture this feature variability while providing physiologically plausible and informative representations of the activities, the images were transformed using rotations, width shifts, height shifts, horizontal flips, shears and zooms. Height shifts and zooms may represent falls at different angles with respect to the radar, whereas width shifts and horizontal flips may represent falls or non-falls at varying distances from the radar sensor. The proposed CNN aims to extract robust features that accurately identify a range of cases, and it can acquire such features only when it is exposed to a substantial number of examples of various kinds.
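A minimal NumPy sketch of three of the warping operations named above (width shift, height shift and horizontal flip) applied to a binary time-frequency image. The shift range (up to 10 % of the image size), the flip probability of 0,5 and the ten copies per image (matching the ×10 factor in Table 1) are assumptions. Rotations, shears and zooms are omitted for brevity, and the function names are hypothetical.

```python
import numpy as np


def shift(img, dy=0, dx=0):
    """Shift a 2-D image by (dy, dx) pixels, filling the vacated pixels with zeros."""
    out = np.zeros_like(img)
    h, w = img.shape
    ys, yd = (slice(0, h - dy), slice(dy, h)) if dy >= 0 else (slice(-dy, h), slice(0, h + dy))
    xs, xd = (slice(0, w - dx), slice(dx, w)) if dx >= 0 else (slice(-dx, w), slice(0, w + dx))
    out[yd, xd] = img[ys, xs]
    return out


def augment(img, rng, n_copies=10, max_shift=0.1):
    """Return `n_copies` randomly flipped and shifted versions of a 2-D image."""
    h, w = img.shape
    max_dy, max_dx = int(max_shift * h), int(max_shift * w)
    copies = []
    for _ in range(n_copies):
        out = img[:, ::-1] if rng.random() < 0.5 else img       # horizontal flip (time axis)
        dy = int(rng.integers(-max_dy, max_dy + 1))             # height shift (frequency)
        dx = int(rng.integers(-max_dx, max_dx + 1))             # width shift (time)
        copies.append(shift(out, dy, dx))
    return np.stack(copies)
```

Filling vacated pixels with zeros (background) keeps the augmented images binary, so no artificial energy is introduced at the borders.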

RESULTS AND DISCUSSION

This section reports the experimental results obtained with the suggested approach. The performance of the CNN is compared with that of autoencoders (AEs),(15) support vector machines (SVMs),(30) decision trees (DTs) and k-nearest neighbours (KNNs).(31) The effectiveness of the suggested fall detection was evaluated on radar data collected with the procedures of Section II, in which the radar return signals were converted into spectrograms. Each spectrogram represents the energy content of an activity as an image, which was binarized and further refined by morphological opening. In the tests, m1 and m2 were set to 129 and 139, respectively, fixing the image size discussed in Section III-C. The resulting binary images were augmented to obtain enough data to train the suggested CNN, and the trained network was then used to classify whether a test image represents a fall. The classification performance of the proposed method was evaluated by fivefold cross validation. To support the definition of the metrics, table 2 defines the true positives (TP), false positives (FP), true negatives (TN) and false negatives (FN) relating fall and non-fall activities. Diagonal entries correspond to correct classifications of fall and non-fall activities, and off-diagonal entries to misclassifications.
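The fivefold cross validation mentioned above can be sketched as follows. The paper does not state whether the folds were stratified by class; stratifying by fall/non-fall label is an assumption here, as are the function name and the random seed.

```python
import numpy as np


def stratified_kfold_indices(labels, k=5, seed=0):
    """Yield (train_idx, test_idx) pairs for k-fold cross validation.

    Each class (e.g. fall / non-fall) is shuffled and split into k nearly
    equal chunks, so every test fold keeps roughly the overall class ratio.
    """
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    folds = [[] for _ in range(k)]
    for cls in np.unique(labels):
        idx = np.flatnonzero(labels == cls)
        rng.shuffle(idx)
        for fold, chunk in zip(folds, np.array_split(idx, k)):
            fold.extend(chunk.tolist())
    all_idx = np.arange(len(labels))
    for fold in folds:
        test_idx = np.sort(np.array(fold, dtype=int))
        train_idx = np.setdiff1d(all_idx, test_idx)
        yield train_idx, test_idx
```

A typical loop would augment only the images indexed by `train_idx`, train on the result, score on the untouched images indexed by `test_idx`, and average the metrics over the five folds. Keeping augmented copies out of the test folds avoids evaluating on near-duplicates of training images.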

Table 2. Fall detection confusion matrix (rows: predicted class; columns: actual class)

| Classes | Falls | Non-falls |
|---|---|---|
| Falls | TP | FP |
| Non-falls | FN | TN |
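Because Table 2 places TP and FP in the "Falls" row, its rows index the predicted class and its columns the actual class. The sketch below counts the four entries from label vectors and arranges them in that layout. The encoding 1 = fall, 0 = non-fall and the function names are hypothetical; normalising each column by the number of actual instances, one possible reading of the "classification rates" mentioned above, is also an assumption.

```python
import numpy as np

FALL = 1  # hypothetical label encoding: 1 = fall, 0 = non-fall


def confusion_counts(y_true, y_pred, positive=FALL):
    """Return (TP, FP, FN, TN) with 'fall' as the positive class."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    tp = int(np.sum((y_pred == positive) & (y_true == positive)))
    fp = int(np.sum((y_pred == positive) & (y_true != positive)))
    fn = int(np.sum((y_pred != positive) & (y_true == positive)))
    tn = int(np.sum((y_pred != positive) & (y_true != positive)))
    return tp, fp, fn, tn


def table2_layout(tp, fp, fn, tn):
    """Rows: predicted (Falls, Non-falls); columns: actual (Falls, Non-falls)."""
    return np.array([[tp, fp], [fn, tn]])


def table2_rates(tp, fp, fn, tn):
    """Divide each column by its actual-class total, so the diagonal holds
    correct-classification rates and the off-diagonal misclassification rates."""
    counts = table2_layout(tp, fp, fn, tn).astype(float)
    return counts / counts.sum(axis=0, keepdims=True)
```

With this normalisation the diagonal equals the sensitivity and specificity defined below, and the off-diagonal entries equal the false negative and false positive rates.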

The following metrics are used to evaluate the performance of the proposed fall detection method:

· Precision (PR): PR = TP/(TP + FP)

· Recall or sensitivity (SE): SE = TP/(TP + FN)

· Specificity (SP): SP = TN/(TN + FP)

· False positive rate (FPR): FPR = FP/(FP + TN)

· False negative rate (FNR): FNR = FN/(FN + TP)

· F-score (F): F = 2TP/(2TP + FP + FN).
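The metric definitions above translate directly into code. This sketch takes the four counts of Table 2 as input; accuracy, which Tables 4 and 5 report but the list does not define, is assumed here to be the standard (TP + TN)/(TP + FP + FN + TN). The function name is illustrative.

```python
def fall_metrics(tp, fp, fn, tn):
    """Return the Table 2-based metrics as fractions in [0, 1].

    All denominators must be non-zero (at least one fall and one non-fall).
    """
    return {
        "PR": tp / (tp + fp),               # precision
        "SE": tp / (tp + fn),               # recall / sensitivity
        "SP": tn / (tn + fp),               # specificity
        "FPR": fp / (fp + tn),              # false positive rate
        "FNR": fn / (fn + tp),              # false negative rate
        "F": 2 * tp / (2 * tp + fp + fn),   # F-score
        # Accuracy is not in the list above; the standard definition is assumed.
        "ACC": (tp + tn) / (tp + fp + fn + tn),
    }
```

For instance, `fall_metrics(90, 10, 5, 95)` gives PR = 0.9 and F = 180/195 ≈ 0.923. Because SP + FPR = 1 and SE + FNR = 1 follow from these definitions, and F equals the harmonic mean 2·PR·SE/(PR + SE), tabulated values can be cross-checked against one another.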

Table 3. Leave-one-subject cross-validation values of PR, SP, SE, FPR and FNR (%) for the compared methods, without and with data augmentation

| Method | PR (no aug.) | SP (no aug.) | SE (no aug.) | FPR (no aug.) | FNR (no aug.) | PR (with aug.) | SP (with aug.) | SE (with aug.) | FPR (with aug.) | FNR (with aug.) |
|---|---|---|---|---|---|---|---|---|---|---|
| LSVM | 77,32 | 89,52 | 76,89 | 23,11 | 10,48 | 79,56 | 77,12 | 73,57 | 26,43 | 22,88 |
| GSVM | 78,78 | 88,34 | 78,12 | 21,88 | 11,66 | 83,66 | 81,34 | 79,12 | 20,88 | 18,66 |
| KNN | 85,89 | 90,42 | 86,10 | 13,9 | 9,58 | 87,92 | 91,51 | 86,22 | 13,78 | 8,49 |
| AE | 89,03 | 90,42 | 88,15 | 11,85 | 9,58 | 87,64 | 93,33 | 88,02 | 11,98 | 6,67 |
| CNN | 92,97 | 93,18 | 92,79 | 7,2 | 6,82 | 91,27 | 95,19 | 91,68 | 8,32 | 4,81 |
| Proposed HOCNN | 94,21 | 94,63 | 93,55 | 6,3 | 5,12 | 92,88 | 96,63 | 93,12 | 9,12 | 3,98 |

Table 3 lists several classification metrics obtained with the suggested approach and with the alternatives, namely KNNs, DTs, AEs and SVMs, for which the vectorized binary images from Section III-C served as the classifier inputs.

Tables 4 and 5 show the classification metrics obtained from five-fold cross validation of the suggested fall detection and of the other methods on the original data, where improvements of up to a factor of 10 were observed. The higher accuracy, precision and specificity in these tables indicate that falls can be identified more reliably when they occur while false alarms are avoided. Without data augmentation, the suggested scheme achieves 96,21 % classification accuracy, compared with 89,01 %, 85,71 %, 92,84 %, 92,85 % and 95,83 % for LSVMs, GSVMs, KNNs, AEs and the CNN, respectively. These results are shown in figure 7.

Table 4. Performance of the classifiers without data augmentation (%)

| Method | Accuracy | Precision | Sensitivity | Specificity | F-Score |
|---|---|---|---|---|---|
| LSVM | 89,01 | 90,69 | 89,95 | 88,32 | 90,17 |
| GSVM | 85,71 | 90,75 | 86,43 | 87,88 | 87,77 |
| KNN | 92,84 | 92,94 | 94,52 | 91,78 | 93,41 |
| AE | 92,85 | 93,25 | 93,40 | 92,34 | 93,30 |
| CNN | 95,83 | 98,37 | 94,37 | 97,82 | 96,28 |
| Proposed HOCNN | 96,21 | 98,99 | 95,12 | 98,56 | 97,52 |

Figure 7. Performance metrics of the classifiers without data augmentation

Figure 7 compares the different classifiers. The higher accuracy, precision and specificity of the suggested approach indicate that it identifies falls more precisely when they happen and avoids false alarms.

Figure 8. Performance metrics of the classifiers after data augmentation

Figure 8 compares the classifiers after data augmentation. Here too, the higher accuracy, precision and specificity of the suggested approach show that it identifies fall occurrences more precisely and avoids false alarms.

Table 5. Performance metrics of the classifiers after data augmentation (%)

| Method | Accuracy | Precision | Sensitivity | Specificity | F-Score |
|---|---|---|---|---|---|
| LSVM | 78,24 | 80,44 | 81,30 | 75,83 | 81,71 |
| GSVM | 83,52 | 87,37 | 84,54 | 83,43 | 86,45 |
| KNN | 90,33 | 90,73 | 92,47 | 88,59 | 91,28 |
| AE | 90,89 | 90,90 | 92,97 | 88,85 | 91,72 |
| CNN | 93,54 | 94,04 | 94,22 | 92,54 | 92,65 |
| Proposed HOCNN | 94,63 | 95,01 | 95,62 | 93,68 | 93,32 |

Table 5 further shows that, when the data are augmented, the suggested method has a lower false alarm rate in detecting fall incidents, as indicated by its higher specificity. Overall, the results show that the suggested method distinguishes well between fall and non-fall activities. The suggested CNN-based fall detection method outperforms the others because, through the hierarchically distributed representations used by CNNs, it determines the salient parts of the signals in its feature representations more accurately than the compared approaches.

CONCLUSIONS

A major issue in contemporary medicine, and specifically in geriatrics, is the absence of reliable non-contact methods for the automated detection of falls, which may lead to life-threatening conditions. This study offered a novel radar-based fall detection approach built on time-frequency analysis and deep learning. Data on fall and non-fall activities were gathered under unrestricted room conditions. Pre-processing of the radar return data determined the target range bin and the clutter effects. The STFT was used for time-frequency analysis to generate spectrograms of the various activities, and the binary images created from the spectrograms were then refined with morphological operators. Class-preserving transformations were used to augment the binary images before they were input into the proposed HOCNN for feature extraction. The proposed network was built by stacking convolutional and fully connected layers. A number of tests were carried out to assess the effectiveness of the suggested fall detection technique and to compare it with other approaches. The outcomes showed that the suggested strategy outperforms the other methods in terms of accuracy, precision, sensitivity and specificity. Additionally, the efficacy of the suggested technique was examined when the network was trained on data gathered from several individuals in one room and evaluated on data gathered from other individuals in a different room. The higher classification metrics obtained in this setting indicate the robustness of the suggested method.
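The pre-processing chain summarised above (STFT spectrogram, binarization, morphological opening) can be sketched in NumPy as follows. This is an illustrative sketch, not the authors' code: the Hann window, window length, hop size, −30 dB threshold and 3 × 3 structuring element are assumptions, and the range-bin selection, clutter handling and resizing to m1 × m2 described in the paper are omitted.

```python
import numpy as np


def stft_spectrogram_db(x, win_len=128, hop=32):
    """Normalised STFT power in dB (rows: Doppler bins, columns: time frames)."""
    window = np.hanning(win_len)
    n_frames = 1 + (len(x) - win_len) // hop
    frames = np.stack([x[i * hop:i * hop + win_len] * window
                       for i in range(n_frames)], axis=1)
    # fftshift puts zero Doppler in the middle row.
    spec = np.fft.fftshift(np.fft.fft(frames, axis=0), axes=0)
    power = np.abs(spec) ** 2
    return 10.0 * np.log10(power / power.max() + 1e-12)


def binarize(spec_db, threshold_db=-30.0):
    """1 where the power is within threshold_db of the peak, else 0."""
    return (spec_db > threshold_db).astype(np.uint8)


def _neighbourhood_stack(img, k):
    """Stack the k x k shifted copies of a zero-padded image."""
    pad = k // 2
    padded = np.pad(img, pad, mode="constant")
    h, w = img.shape
    return np.stack([padded[dy:dy + h, dx:dx + w]
                     for dy in range(k) for dx in range(k)])


def opening(img, k=3):
    """Morphological opening with a k x k square: erosion, then dilation.

    Removes foreground specks smaller than the structuring element.
    """
    eroded = _neighbourhood_stack(img, k).min(axis=0)
    return _neighbourhood_stack(eroded, k).max(axis=0)


def spectrogram_to_binary(x, threshold_db=-30.0, win_len=128, hop=32, k=3):
    """STFT -> dB spectrogram -> threshold -> opening."""
    spec_db = stft_spectrogram_db(x, win_len, hop)
    return opening(binarize(spec_db, threshold_db), k)
```

A fixed dB threshold is the simplest binarization rule and is used here only for illustration; the opening step then removes isolated pixels so that only the coherent signature of the activity remains.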

BIBLIOGRAPHIC REFERENCES

1. United Nations Department of Economic and Social Affairs, Population Division. World Population Prospects 2019: Highlights.

2. United Nations Department of Economic and Social Affairs, Population Division. World Population Ageing 2019: Highlights.

3. World Health Organization. Global report on falls prevention in older age. WHO; 2020.

4. Little L, Briggs P. Pervasive healthcare: the elderly perspective.

5. Edfors E, Westergren A. Home-living elderly people’s views on food and meals. J Aging Res, 2012, pp. 761291. https://doi.org/10.1155/2012/761291.

6. Corbishley P, Rodriguez-Villegas E. Breathing detection: Towards a miniaturized, wearable, battery-operated monitoring system. IEEE Trans Biomed Eng, 55(1), pp. 196–204. https://doi.org/10.1109/TBME.2007.912639.

7. Chen VC, Tahmoush D, Miceli WJ. Radar Micro-Doppler Signatures: Processing and Applications. Radar, Sonar, Navigation and Avionics. London: Institution of Engineering and Technology.

8. Bryan JD, Kwon J, Lee N, Kim Y. Application of ultra-wideband radar for classification of human activities. IET Radar Sonar Navig, 6(3), pp. 172–9. https://doi.org/10.1049/iet-rsn.2011.0165.

9. Hazelhoff L, Han J, de With PHN. Video-based fall detection in the home using principal component analysis. In: Proceedings of the International Conference on Advanced Concepts for Intelligent Vision Systems, pp. 298–309.

10. Ding C, Wang L, Jiang H, Wang Q. Non-contact human motion recognition based on UWB radar. IEEE Trans Emerg Sel Topics Circuits Syst, 8(2), pp. 306–15. https://doi.org/10.1109/JETCAS.2018.2843262.

11. Abdelhedi S, Bourguiba R, Mouine J, Baklouti M. Development of a two-threshold-based fall detection algorithm for elderly health monitoring. In: Proceedings of the IEEE International Conference on Research Challenges in Information Science, pp. 1–5. https://doi.org/10.1109/RCIS.2016.7549358.

12. Bjorklund S, Petersson H, Hendeby G. Features for micro-Doppler based activity classification. IET Radar Sonar Navig, 9(9), pp. 1181–7. https://doi.org/10.1049/iet-rsn.2014.0254.

13. Wu Q, Zhang YD, Tao W, Amin M. Radar-based fall detection based on Doppler time-frequency signatures for assisted living. IET Radar Sonar Navig, 9(2), pp. 164–72. https://doi.org/10.1049/iet-rsn.2014.0231.

14. Han J, Zhang D, Cheng G, Liu N, Xu D. Advanced deep-learning techniques for salient and category-specific object detection: A survey. IEEE Signal Process Mag, 35(1), pp. 84–100. https://doi.org/10.1109/MSP.2017.2766795.

15. Jokanovic B, Amin M, Ahmad F. Radar fall motion detection using deep learning. In: Proceedings of the IEEE Radar Conference, pp. 1–6. https://doi.org/10.1109/RADAR.2016.7485164.

16. Wagner D, Kalischewski K, Velten J, Kummert A. Activity recognition using inertial sensors and a 2D convolutional neural network. In: Proceedings of the International Workshop on Multidimensional Systems, pp. 1–6.

17. Lang Y, Hou C, Yang Y, Huang D, He Y. Convolutional neural network for human micro-Doppler classification. In: Proceedings of the European Microwave Conference, pp. 1–4. https://doi.org/10.23919/EuMC.2017.8230918.

18. Maitre J, Bouchard K, Gaboury S. Fall Detection with UWB Radars and CNN-LSTM Architecture. IEEE J Biomed Health Inform, 25(5), pp. 1273–83. https://doi.org/10.1109/JBHI.2021.3062385.

19. Saho K, Hayashi S, Tsuyama M, Meng L, Masugi M. Machine Learning-Based Classification of Human Behaviors and Falls in Restroom via Dual Doppler Radar Measurements. Sensors, 22(5), pp. 1721. https://doi.org/10.3390/s22051721.

20. Yang T, Cao J, Guo Y. Placement selection of millimeter wave FMCW radar for indoor fall detection. In: Proceedings of the 2018 IEEE MTT-S International Wireless Symposium (IWS), pp. 1–3. https://doi.org/10.1109/IEEE-IWS.2018.8400908.

21. Mager B, Patwari N, Bocca M. Fall detection using RF sensor networks. In: Proceedings of the 2013 IEEE 24th Annual International Symposium on Personal, Indoor, and Mobile Radio Communications (PIMRC), pp. 3472–6. https://doi.org/10.1109/PIMRC.2013.6666700.

22. Carneiro SA, da Silva GP, Leite GV, Moreno R, Guimaraes SJF, Pedrini H. Multi-stream deep convolutional network using high-level features applied to fall detection in video sequences. In: Proceedings of the International Conference on Systems, Signals and Image Processing (IWSSIP), pp. 293–8. https://doi.org/10.1109/IWSSIP.2019.8787290.

23. Casilari E, Lora-Rivera R, García-Lagos F. A study on the application of convolutional neural networks to fall detection evaluated with multiple public datasets. Sensors, 20(5), pp. 1466. https://doi.org/10.3390/s20051466.

24. Novelda’s XeThru X4M03. 2018.

25. Stankovic L, Dakovic M, Thayaparan T. Time-Frequency Signal Analysis with Applications. Norwood, MA: Artech House.

26. Erol B, Francisco M, Ravisankar A, Amin M. Realization of radar-based fall detection using spectrograms. In: Compressive Sensing VII: From Diverse Modalities to Big Data Analytics. https://doi.org/10.1117/12.2306892.

27. Gonzalez RC, Woods RE. Digital Image Processing. 3rd ed. Englewood Cliffs, NJ: Prentice-Hall.

28. Karthiga M, Santhi V, Sountharrajan S. Hybrid optimized convolutional neural network for efficient classification of ECG signals in healthcare monitoring. Biomed Signal Process Control, 76, pp. 103731. https://doi.org/10.1016/j.bspc.2021.103731.

29. Li JQ, Pan QK, Xie SX, Wang S. A hybrid artificial bee colony algorithm for flexible job shop scheduling problems. Int J Comput Commun Control, 6(2), pp. 286–96. https://doi.org/10.15837/ijccc.2011.2.2499.

30. Liu L, Popescu M, Skubic M, Rantz M, Yardibi T, Cuddihy P. Automatic fall detection based on Doppler radar motion signature. In: Proceedings of the International Conference on Pervasive Computing Technologies for Healthcare, pp. 222–5. https://doi.org/10.4108/icst.pervasivehealth.2011.246050.

31. Yu M, Rhuma A, Naqvi SM, Wang L, Chambers J. A posture recognition-based fall detection system for monitoring an elderly person in a smart home environment. IEEE Trans Inf Technol Biomed, 16(6), pp. 1274–86. https://doi.org/10.1109/TITB.2012.2203313.

FINANCING

The authors did not receive financing for the development of this research.

CONFLICT OF INTEREST

The authors declare that there is no conflict of interest.

AUTHORSHIP CONTRIBUTION

Conceptualization: Nester Jeyakumar M.

Data curation: Jasmine Samraj.

Formal analysis: Bennet Rajesh M.

Investigation: Nester Jeyakumar M.

Methodology: Bennet Rajesh M.

Writing - original draft: Nester Jeyakumar M.

Writing - review and editing: Jasmine Samraj.