doi: 10.56294/sctconf2024.1211

ORIGINAL

Object detection and state analysis of pigs by deep learning in pig breeding


Xiaolu Zhang1 *, Jeffrey Sarmiento1, Anton Louise De Ocampo1, Rowell Hernandez1

1College of Engineering, Batangas State University, the National Engineering Alangilan Campus. Batangas City 4200, Philippines.

Cite as: Zhang X, Sarmiento J, De Ocampo AL, Hernandez R. Object detection and state analysis of pigs by deep learning in pig breeding. Salud, Ciencia y Tecnología - Serie de Conferencias. 2024; 3:1211. https://doi.org/10.56294/sctconf2024.1211

Submitted: 05-03-2024 Revised: 15-07-2024 Accepted: 11-10-2024 Published: 12-10-2024

Editor: Dr. William Castillo-González

Corresponding Author: Xiaolu Zhang *

ABSTRACT

Introduction: attack behavior is common in intensive pig breeding, where harsh piggery conditions can lead to illness or even death. Manually observing and recognizing pig attack behaviors in intensive breeding operations incurs high labor costs. Objective: this study aims to employ deep learning techniques to identify and classify various aggressive behaviors in pigs, improving monitoring efficiency in breeding facilities.

Method: a novel ladybug beetle-optimized adaptive convolutional neural network (LBO-ACNN) was proposed to recognize pig behavior in pig breeding. A pig object detection dataset was gathered for this investigation. The data were preprocessed with the discrete wavelet transform (DWT), which decomposes each image into its frequency components and removes noise from each component. The proposed method was implemented in Python and compared with other algorithms.

Result: the experimental results show that the proposed strategy accurately identifies pig behaviors, achieving an F1-score of 93,31 %, recall of 92,51 %, precision of 94,17 %, and accuracy of 94,78 %, demonstrating its effectiveness in monitoring and classifying behaviors in breeding facilities.

Conclusion: the research recognized and classified pigs' movement length and behavior in their normal habitat, and it provides technological support for investigating pig social hierarchy, pig health, and behavioral selection.

Keywords: Pig Breeding; Healthcare; Behavior; Discrete Wavelet Transform (DWT); Ladybug Beetle Optimized Adaptive Convolutional Neural Network (LBO-ACNN).


INTRODUCTION

Monitoring and identifying pigs and their states on large-scale production farms is one of the most significant issues investigated to promote productivity and optimize pig farming.(1) Pig breeding today, particularly large-scale production with large populations of sows and piglets, is a difficult task that demands close monitoring of health, feeding behavior, and productivity.(2) These present-day difficulties could be mitigated by greater computing capacity and deep learning techniques such as computer vision.(3)

Advanced methods include object detection algorithms, which identify individual pigs within a group and are applied to observe health indices, discover abnormalities, and examine behavioral dynamics and variations.(4) State analysis covers aspects such as activity level, feeding, and social behavior. It can help improve breeding quality, assess the care provided to pigs, and raise the efficiency of pig performance.(5) Computer-aided systems for object recognition and state assessment in pigs can help identify potential illnesses, support disease control measures, and make optimal use of available resources.(6) Furthermore, combining these technologies not only improves management performance but also promotes pig welfare, because it gives a deeper and broader view of the animals' needs and environment.(7)

One study analysed approaches for developing a DL model suitable for continuous, round-the-clock pig posture detection.(8) It selected a DL framework from more than 150 network configurations, covering trials with four base networks, three detection heads, five transfer datasets, and twelve data augmentations. Another study established a framework to detect and quantify interactions among group-housed pigs, from the head of one pig to another pig.(9) The approach used only low-cost camera-based data collection equipment and eliminated the need for individual pig tracking and identification. A further paper applied DL instance segmentation models to data from pig-focused livestock farms to determine whether such models could handle the particular difficulties of that setting.(10)

A framework for automatically recognizing social contacts among pigs was proposed.(11) Within that framework, a CNN identified the position and orientation of the pigs in a video, and the Kalman filter (KF) approach tracked their motion. Another study presented an innovative approach to current problems in automating the identification of eating behaviors in pigs: an automatic monitoring method that uses video surveillance to estimate the eating behavior of group-housed farmed pigs.(12) Two DL-based detectors were designed to recognize posture and drinking behavior in group-housed pigs.(13) A CNN-based approach was developed to detect several forms of social interaction among pre-weaning piglets, including aggressive/playing behavior and social nosing, which involves both nose-snout and nose-body contact.(14)

Computer vision-based techniques for identifying tail biting were developed in one study, which combined a CNN that extracts spatial information with a secondary system responsible for temporal information.(15) Another study developed a computer vision-based methodology to locate and recognize tail-biting behavior in group-housed pigs.(16) It used a tracking-by-detection technique to reduce group-level behavior to pairwise interactions, and then combined a CNN and an RNN to extract spatial-temporal information and classify the behavior. To identify aggressive incidents in pigs, a deep learning technique combining CNN and LSTM was developed.(17) Unlike earlier deep learning-based studies of pig behavior, it processes video episodes directly instead of analyzing individual frames. A computer vision system built on DL technology was also used to recognize the location and posture of grouped pigs.(18)

The primary objective is to develop and implement the LBO-ACNN method to accurately recognize pig behaviors in intensive breeding environments, facilitating enhanced monitoring and management for optimized animal welfare, productivity, and farm efficiency.

The remainder of the paper is organized as follows: Part 2 presents the proposed technique, Part 3 evaluates its results, Part 4 presents the discussion, and Part 5 concludes the study.

METHOD

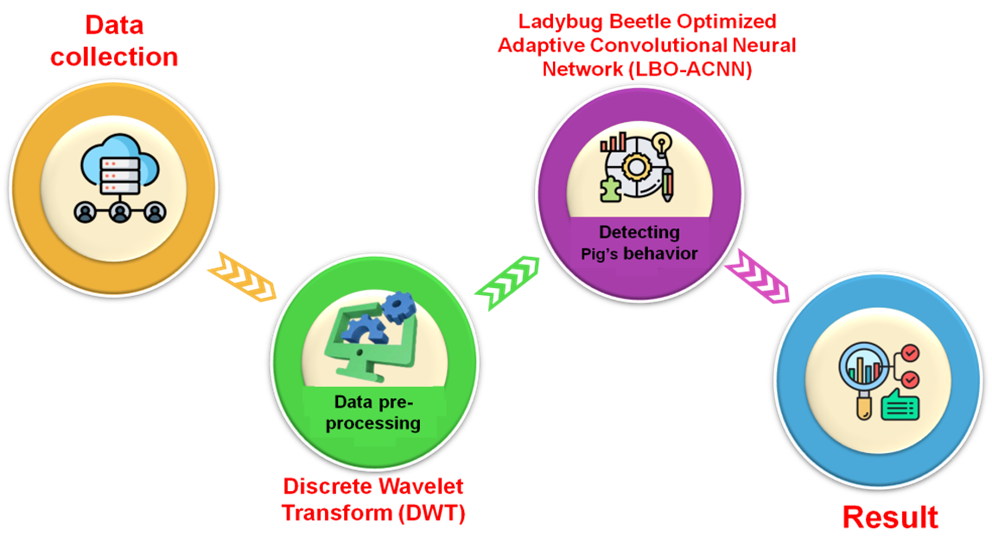

For the process of identifying the pig’s behavior, a pig object detection dataset was gathered. The collected data was then preprocessed using Discrete Wavelet Transform (DWT) to eliminate noise. With the Ladybug Beetle-Optimized Adaptive Convolutional Neural Network (LBO-ACNN) approach, the pig’s behavior was identified with high precision. An outline of the basic structure of the system has been illustrated in figure 1.

Figure 1. Fundamental structure of the system

Data collection

The open-source pig detection dataset was obtained from Kaggle (source: https://www.kaggle.com/datasets/trainingdatapro/farm-animals-pigs-detection-dataset). It contains diverse images labeled with bounding boxes for detecting pig heads, and it covers a wide range of pig breeds, sizes, and orientations, so that many kinds of pig appearance are represented. This variety helps to develop a high-performing detection model that can handle many of the conditions observed in practice.

Data pre-processing

The Discrete Wavelet Transform (DWT) is an efficient mathematical tool for multiresolution analysis of signals and images, which makes it well suited to image pre-processing. It can break non-stationary signals into multiple frequency sub-bands, which is useful when images must be examined at several resolutions. In image processing, DWT splits the image data into sub-bands that express different properties of the image at different resolutions. This capability is important for improving image quality through denoising and for strengthening features such as edges and texture. The transform separates the original image into approximation and detail coefficients, which are the outputs of the low-pass and high-pass filters, respectively. The decomposition is performed with Mallat's algorithm, in which these filters divide the image into several frequency components. The resulting approximation coefficients (dB) and detail coefficients (dC), given in equations (1) and (2), support further analysis of the image data.
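Equations (1) and (2) did not survive extraction. For reference, the textbook one-level analysis step of Mallat's algorithm for a one-dimensional signal x is shown below (in 2D it is applied along rows and then columns). It uses the text's names, dB for approximation and dC for detail coefficients, with h and g as the low-pass and high-pass analysis filters; this is the standard form, not necessarily the exact notation of the original equations.

```latex
dB_{j+1}[n] = \sum_{k} h[k - 2n]\, dB_{j}[k], \qquad
dC_{j+1}[n] = \sum_{k} g[k - 2n]\, dB_{j}[k], \qquad
dB_{0} = x
```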


The procedure decomposes the image data and later reconstructs it. DWT uses a low-pass filter and a high-pass filter, and the Mallat algorithm is used for multi-level decomposition. During decomposition, the original image data passes through four low-pass and four high-pass filters, producing two sets of coefficients: detail coefficients and approximation coefficients. To recover the dimensions of the original image data, the inverse process, the inverse discrete wavelet transform (IDWT), is applied. IDWT reconstructs the data from the approximation and detail coefficients and produces an image of the same size as the original but with modified frequency content.
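As an illustration of the DWT denoising step, the following NumPy sketch rests on assumptions the paper does not state: a Haar wavelet, two decomposition levels, and soft thresholding of the detail coefficients with the universal threshold σ√(2 ln N), where σ is estimated from the finest diagonal band. It shows decomposition into approximation and detail sub-bands, noise suppression in the detail bands, and IDWT reconstruction. All function names are hypothetical; for other wavelet families, PyWavelets provides `pywt.wavedec2` and `pywt.waverec2`.

```python
import numpy as np


def haar_dwt2(img):
    """One level of the orthonormal 2D Haar DWT; height and width must be even."""
    a, b = img[0::2, 0::2], img[0::2, 1::2]
    c, d = img[1::2, 0::2], img[1::2, 1::2]
    ll = (a + b + c + d) / 2  # approximation: low-pass along rows and columns
    lh = (a - b + c - d) / 2  # detail: differences between neighbouring columns
    hl = (a + b - c - d) / 2  # detail: differences between neighbouring rows
    hh = (a - b - c + d) / 2  # detail: diagonal differences
    return ll, (lh, hl, hh)


def haar_idwt2(ll, details):
    """Inverse transform (IDWT): rebuild the image from its four sub-bands."""
    lh, hl, hh = details
    out = np.empty((2 * ll.shape[0], 2 * ll.shape[1]))
    out[0::2, 0::2] = (ll + lh + hl + hh) / 2
    out[0::2, 1::2] = (ll - lh + hl - hh) / 2
    out[1::2, 0::2] = (ll + lh - hl - hh) / 2
    out[1::2, 1::2] = (ll - lh - hl + hh) / 2
    return out


def soft_threshold(x, t):
    """Shrink coefficients towards zero by t; values with |x| <= t become 0."""
    return np.sign(x) * np.maximum(np.abs(x) - t, 0.0)


def dwt_denoise(img, levels=2):
    """Decompose, soft-threshold every detail band, then reconstruct.
    Height and width must be divisible by 2**levels."""
    ll = np.asarray(img, dtype=float)
    n_pixels = ll.size
    details = []
    for _ in range(levels):  # Mallat-style pyramid: keep splitting the approximation
        ll, det = haar_dwt2(ll)
        details.append(det)
    # Noise level from the finest diagonal band (median absolute deviation rule).
    sigma = np.median(np.abs(details[0][2])) / 0.6745
    t = sigma * np.sqrt(2.0 * np.log(n_pixels))  # universal threshold
    details = [tuple(soft_threshold(band, t) for band in det) for det in details]
    for det in reversed(details):  # IDWT, coarsest level first
        ll = haar_idwt2(ll, det)
    return ll


# Usage on a synthetic image: a bright square plus Gaussian noise.
rng = np.random.default_rng(0)
clean = np.zeros((64, 64))
clean[16:48, 16:48] = 1.0
noisy = clean + rng.normal(0.0, 0.2, clean.shape)
denoised = dwt_denoise(noisy)
print("MSE noisy:", np.mean((noisy - clean) ** 2),
      "MSE denoised:", np.mean((denoised - clean) ** 2))
```

Soft thresholding is used here because it avoids the abrupt jumps of hard thresholding; with the threshold set to zero the pipeline reconstructs the input exactly.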

Detect pig’s behavior in pig breeding

This study uses a Ladybug Beetle-Optimized Adaptive Convolutional Neural Network (LBO-ACNN) to develop a new approach for recognizing pig behavior in the breeding context. Using the Ladybug Beetle Optimization (LBO) approach to optimize the Adaptive Convolutional Neural Network (ACNN) enhances accuracy and efficiency in behavioral analysis.

Ladybug Beetle-Optimized Adaptive Convolutional Neural Network (LBO-ACNN)

LBO-ACNN is a novel technique for improving pig behavior pattern recognition on breeding farms. It combines the foraging strategy of ladybug beetles with the feature extraction capability of a CNN. The parameters of LBO-ACNN are adapted to the characteristics of the pig behavior data, so that behaviors such as feeding, resting, and social behavior can be classified. Its adaptive behavior also allows the network to learn from the input data and to balance computational cost, time, and precision in behavior detection.

This optimization process reduces training time and improves the network's generalization across datasets, supporting real-time applications in pig breeding. Integrating ladybug beetle-based optimization helps the network cope with the difficulties of behavioral data and reach more accurate conclusions during detection. The method is an innovation in automatic behavior analysis and addresses a gap in solutions for improving the quality of animal husbandry and the overall organizational effectiveness of pig breeding.

Adaptive Convolutional Neural Network (ACNN)

The ACNN improves on typical convolutional networks by adapting the structure of its layers to the characteristics of the input data. For pig behavior identification, this improves feature extraction and classification, and the added flexibility allows the various actions of pigs to be identified and assessed more accurately. Each axis of time-frequency data, such as that obtained from wavelet transforms, represents a different physical dimension of the image. To exploit this information in the model, two scaling maps were used, one for the frequency domain and one for the time (spatial) domain. These maps are applied to the corresponding axes of the adaptive kernel in the ACNN module.

The ACNN module begins by creating two scaling matrices from the input W using pooling layers, where G and X denote the input dimensions, Din is the number of input channels, and Dout is the number of output channels. The frequency-domain scaling matrix Ne(W) and the time-domain scaling matrix Ns(W) are given in equations (3) and (4), where conv denotes a 1D-CNN, LX and LG denote the convolution kernels, and each subscript gives the weight size as (output channel, input channel, kernel).

Ne(W) is computed from the matrix produced by global time-average pooling, that is, the mean of the input across the time axis. Ns(W) is computed from the matrix produced by global frequency-average pooling, that is, the mean along the frequency axis. ReLU is used as the nonlinearity, and all convolutions have a kernel size of 3, a stride of 1, and zero padding of 1.

To create a 3D scaling matrix, the two 2D scaling matrices are broadcast to a common shape and combined by element-wise summation, which supports effective gradient flow. As shown in equation (5), a sigmoid function is then applied to the combined matrix, so every entry of the final scaling matrix lies between 0 and 1.

The content-invariant kernel L, a trainable parameter, is multiplied element-wise with the 3D scaling matrix for every output channel. For an input W, this yields the modified kernel L̃(W) of equation (6): for 1 ≤ j ≤ Dout, the jth output-channel kernel L̃j(W) is the element-wise product of the 3D scaling matrix and the jth output-channel slice of L.
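Because equations (3) to (6) were lost in extraction, the following is a minimal NumPy sketch of the kernel-scaling mechanism described above, not the authors' implementation. Assumptions (ours): the input W is laid out as (Din, G, X) with G the frequency axis and X the time axis; after global time or frequency averaging, each pooled map is binned down to the kernel length along the remaining axis; each scaling branch is two kernel-3 1D convolutions with a ReLU in between and hidden width Din. All function names and the weight keys `e1`, `e2`, `s1`, `s2` are hypothetical.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def conv1d_same(x, w):
    """1D convolution (cross-correlation), kernel 3, stride 1, zero padding 1.
    x: (c_in, n), w: (c_out, c_in, 3) -> (c_out, n)."""
    xp = np.pad(x, ((0, 0), (1, 1)))
    return np.stack([np.einsum("oik,ik->o", w, xp[:, i:i + 3])
                     for i in range(x.shape[1])], axis=1)


def pool_to(v, k):
    """Average v: (d, n) into k near-equal bins along the last axis -> (d, k)."""
    bins = np.array_split(np.arange(v.shape[1]), k)
    return np.stack([v[:, idx].mean(axis=1) for idx in bins], axis=1)


def adaptive_kernel(W, L, p):
    """W: input (d_in, G, X), G = frequency axis, X = time axis.
    L: content-invariant kernel (d_out, d_in, k_g, k_x).
    p: hypothetical 1D-conv weights e1, e2, s1, s2, each (d_in, d_in, 3)."""
    _, _, k_g, k_x = L.shape
    m_time = pool_to(W.mean(axis=2), k_g)  # global time-average pooling -> (d_in, k_g)
    m_freq = pool_to(W.mean(axis=1), k_x)  # global frequency-average pooling -> (d_in, k_x)
    n_e = conv1d_same(relu(conv1d_same(m_time, p["e1"])), p["e2"])  # frequency scaling
    n_s = conv1d_same(relu(conv1d_same(m_freq, p["s1"])), p["s2"])  # time scaling
    s = sigmoid(n_e[:, :, None] + n_s[:, None, :])  # broadcast to 3D, add, map to (0, 1)
    return L * s[None]  # same 3D scaling applied to every output channel j


def conv2d_same(W, K):
    """Plain 2D convolution with zero padding; K: (d_out, d_in, k_g, k_x), odd sizes."""
    d_out, _, k_g, k_x = K.shape
    Wp = np.pad(W, ((0, 0), (k_g // 2, k_g // 2), (k_x // 2, k_x // 2)))
    out = np.zeros((d_out,) + W.shape[1:])
    for g in range(W.shape[1]):
        for x in range(W.shape[2]):
            out[:, g, x] = np.einsum("oigx,igx->o", K, Wp[:, g:g + k_g, x:x + k_x])
    return out


# Usage with random data: 4 input channels of a 16 x 20 time-frequency map.
rng = np.random.default_rng(0)
W = rng.standard_normal((4, 16, 20))
L = rng.standard_normal((8, 4, 3, 3))
p = {k: 0.1 * rng.standard_normal((4, 4, 3)) for k in ("e1", "e2", "s1", "s2")}
L_tilde = adaptive_kernel(W, L, p)  # input-dependent kernel, shape (8, 4, 3, 3)
Y = conv2d_same(W, L_tilde)         # output feature map, shape (8, 16, 20)
```

Because the sigmoid output lies between 0 and 1, the adaptive kernel never exceeds the content-invariant kernel in magnitude; it only re-weights kernel positions along the frequency and time axes according to the current input.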

Ladybug Beetle Optimization (LBO) algorithm

The optimization used to identify pig behavior is enriched with the LBO approach, which is derived from the foraging behavior of ladybugs. Because LBO dynamically controls its search parameters, it provides an optimized solution for the behavior classification task, helping the model distinguish different patterns of pig behavior more closely and classify them with better performance. In the first phase, a ladybug can detect a location that is warmer than that of another ladybug. The mutation process in this model balances exploration and exploitation. In each cycle, a member of the population is selected to update each individual in the group. The new position of a ladybug is represented mathematically by equation (7). The parameter used in this update is given by equation (8), where Td is obtained by adding the value of the dth ladybug to the total value of the ladybugs in the ith iteration.

Here, the cth ladybug is chosen by roulette-wheel selection to replace the dth ladybug. The roulette wheel divides the range [0,1] into unequal sections, one per ladybug, and a random number drawn in this range determines which ladybug is selected. Ladybugs in warmer positions, that is, with better objective-function values, are favored. Equation (9) gives the population vector, denoted Y(i).

Here, gxt is the worst objective-function value in the current iteration, and β is the pressure coefficient of the wheel. A larger β makes the roulette wheel more likely to select the best ladybugs in the population. These procedures perform mutations randomly and also substantially speed up the search. Equation (10) gives the number of decision variables of a ladybug that are mutated.

Here, M is the number of decision variables and μN is the mutation rate. The variables of the dth ladybug to be mutated are chosen at random, and new random values within the feasible region are assigned to them to set the ladybug's new location. Equation (11) gives the number of ladybugs in each phase and represents the annihilation (population reduction) operation, where EF is the current function evaluation count and EFNW is the maximum function evaluation count. Equation (12) gives the updated number of ladybugs in each iteration when the termination condition is specified as a number of iterations.

In this case, the term i represents the iteration, and the term iNW is the maximal iteration.
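Equations (7) to (12) cannot be recovered from the text, so the following Python sketch only illustrates the ingredients described above: roulette-wheel selection with a pressure coefficient `beta` normalized by the worst objective value, mutation of about `mu`·M randomly chosen decision variables inside the bounds, and a linear reduction of the population over the iterations. The movement rule, the shrinking random step, the greedy acceptance, the reduction schedule, and the name `ladybug_search` are our assumptions; this is a ladybug-inspired population search, not the published LBO update equations.

```python
import numpy as np


def ladybug_search(objective, lower, upper, n_max=30, n_min=5, iters=60,
                   beta=3.0, mu=0.2, seed=0):
    """Illustrative ladybug-inspired minimizer (hypothetical, not the published LBO)."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    m = lower.size                               # M: number of decision variables
    n_mut = max(1, int(np.ceil(mu * m)))         # variables re-sampled per mutation
    pop = rng.uniform(lower, upper, size=(n_max, m))
    fit = np.array([objective(x) for x in pop])
    history = [fit.min()]
    for i in range(iters):
        best, worst = fit.min(), fit.max()       # worst plays the role of gxt
        # Roulette wheel: lower cost -> larger slice; beta sets the selection pressure.
        w = np.exp(-beta * (fit - best) / (worst - best + 1e-12))
        probs = w / w.sum()
        for d in range(len(pop)):
            c = rng.choice(len(pop), p=probs)    # ladybug c picked by the wheel
            # Move d toward the "warmer" ladybug c, plus a shrinking random step.
            step = 0.1 * (upper - lower) * (1 - i / iters) * rng.standard_normal(m)
            move = np.clip(pop[d] + rng.random(m) * (pop[c] - pop[d]) + step, lower, upper)
            # Mutation: re-sample n_mut randomly chosen variables inside the bounds.
            mutant = pop[d].copy()
            idx = rng.choice(m, size=n_mut, replace=False)
            mutant[idx] = rng.uniform(lower[idx], upper[idx])
            for cand in (move, mutant):          # greedy: keep only improvements
                f = objective(cand)
                if f < fit[d]:
                    pop[d], fit[d] = cand, f
        # Linear population reduction from n_max to n_min over the iterations.
        n_next = int(round(n_max - (n_max - n_min) * (i + 1) / iters))
        keep = np.argsort(fit)[:n_next]
        pop, fit = pop[keep], fit[keep]
        history.append(fit.min())
    k = int(np.argmin(fit))
    return pop[k], fit[k], history


# Usage on a toy objective (sphere function in 5 dimensions).
def sphere(x):
    return float(np.sum(x ** 2))


x_best, f_best, hist = ladybug_search(sphere, [-5.0] * 5, [5.0] * 5)
print("best cost:", f_best)
```

In LBO-ACNN the objective would presumably be the validation error of an ACNN trained with a candidate hyperparameter vector; the text does not state which ACNN parameters are tuned, so the toy sphere function stands in here.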

RESULTS

To evaluate the performance of the proposed approach, F1-score (%), recall (%), precision (%), and accuracy (%) were used. The method was compared with existing approaches, including ResNet, MobileNet, YOLOX-DNAM, and YOLOX-NAM.

Experimental setup

Experiments were run with Python 3.12 on Windows 11, using a contemporary laptop with a 12th-generation Intel Core i7 CPU, a configuration capable of recording performance information for intensive multitasking and design workloads.

The collected data was presented as input to the system, which effectively identified the various pig behaviors. The result demonstrates the method’s effectiveness in detecting pig behaviors. Sample outputs are presented in figure 2.

Figure 2. Sample output

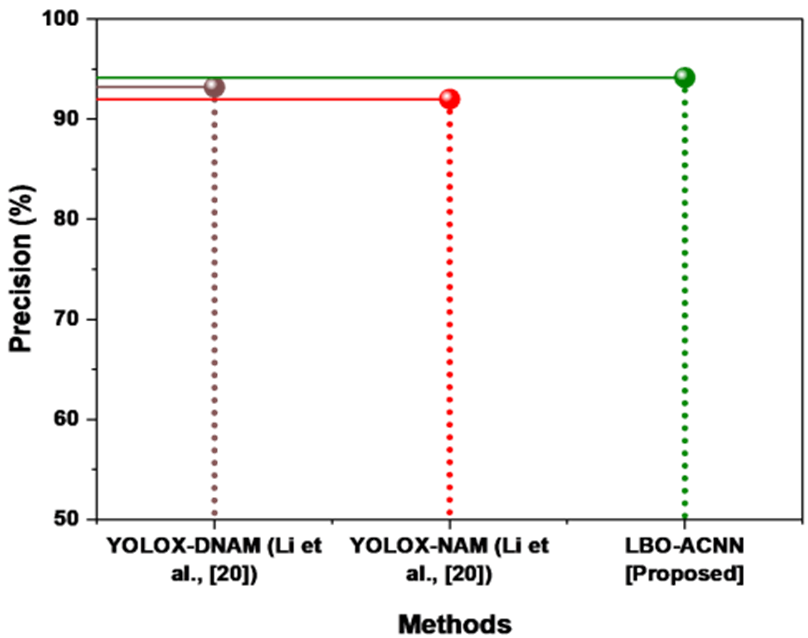

Precision is the proportion of correct identifications among all positive identifications made by the model. The proposed approach showed high precision, meaning that when it predicted a behavior, the prediction was usually correct. The proposed LBO-ACNN method (94,17 %) improves on existing methods such as YOLOX-NAM (92,01 %) and YOLOX-DNAM (93,21 %). Figure 3 presents the precision results.

Figure 3. Precision result

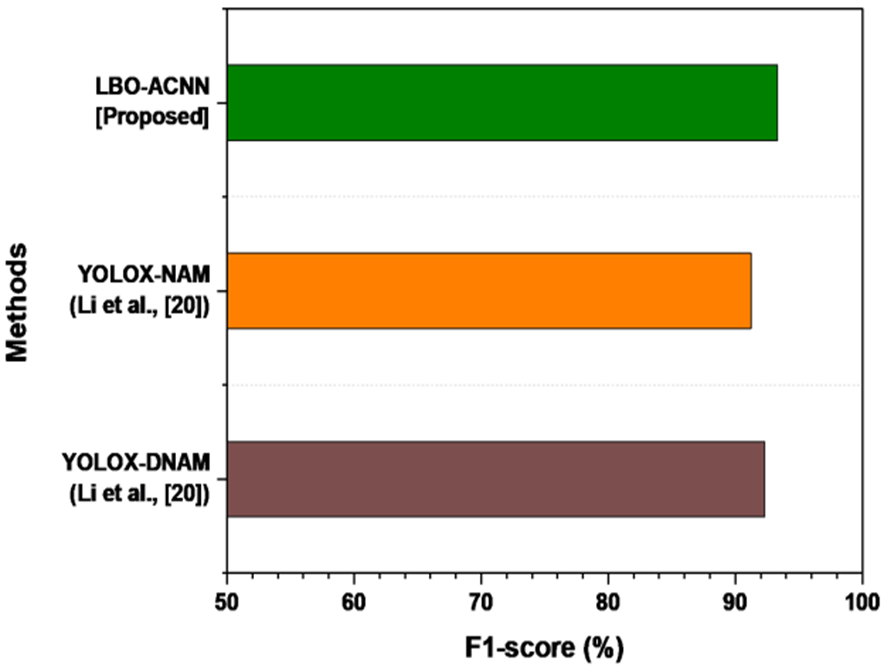

The F1-score gives a balanced assessment of recall and precision as their harmonic mean. The proposed method maintains a good balance between the two metrics, ensuring reliable behavior identification. The proposed LBO-ACNN approach (93,31 %) attains a higher F1-score than existing techniques such as YOLOX-NAM (91,22 %) and YOLOX-DNAM (92,31 %). Figure 4 displays the F1-score results.
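The F1-score is the harmonic mean F1 = 2PR / (P + R). As a quick check, the sketch below applies it to the precision values reported in the precision results and the recall values reported in the recall results of this section; the helper name `f1_from` is ours.

```python
def f1_from(precision, recall):
    """Harmonic mean of precision and recall (both given in %)."""
    return 2 * precision * recall / (precision + recall)


reported = {  # (precision %, recall %) as reported in this section
    "YOLOX-NAM": (92.01, 90.45),
    "YOLOX-DNAM": (93.21, 91.43),
    "LBO-ACNN": (94.17, 92.51),
}
for name, (p, r) in reported.items():
    print(f"{name}: F1 = {f1_from(p, r):.2f} %")
```

The first two rows reproduce the reported F1-scores (91,22 % and 92,31 %). For LBO-ACNN the harmonic mean is about 93,33 %, slightly above the reported 93,31 %, which would be consistent with, for example, F1 being averaged per class rather than computed from the averaged precision and recall.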

Figure 4. Result of F1-score

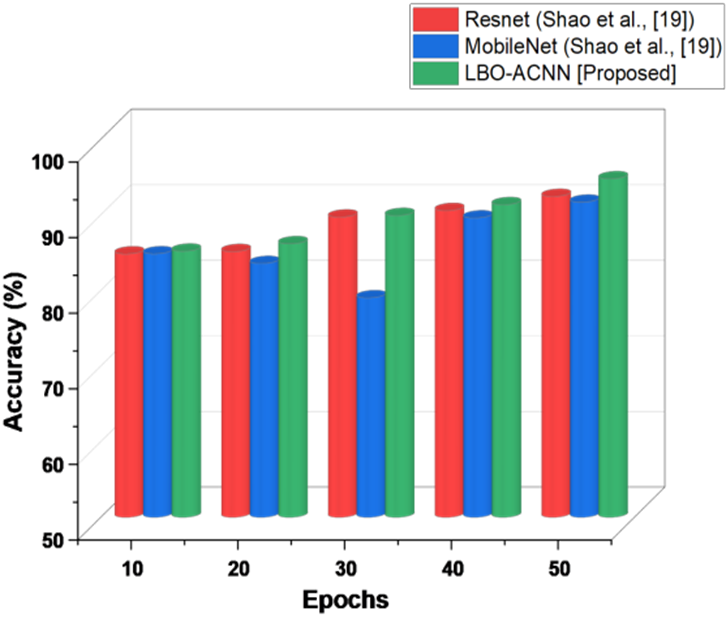

Accuracy measures the overall correctness of the model in identifying pig behaviors. The accuracy results are displayed in figure 5. The proposed approach was compared with the existing approaches over 50 epochs: it achieved better accuracy at the 10th epoch and continued to do so at the 50th epoch. The proposed LBO-ACNN technique reached 94,78 %, compared with 91,69 % for MobileNet and 92,45 % for ResNet. This higher accuracy demonstrates its ability to correctly classify pig behavior.

Figure 5. Accuracy results

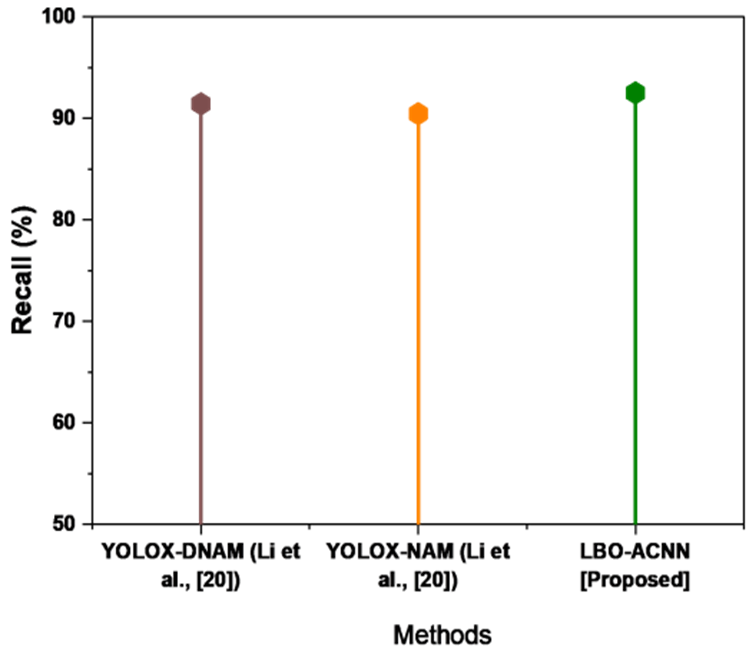

Recall reflects the system's ability to identify all relevant occurrences of pig behaviors. Figure 6 shows the recall results. The proposed LBO-ACNN method (92,51 %) was compared with existing approaches such as YOLOX-NAM (90,45 %) and YOLOX-DNAM (91,43 %). Its higher recall shows that it detects pig behavior without significant omissions and outperforms the existing approaches.
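To make the four metrics concrete, the following sketch computes per-class precision, recall, and F1 and overall accuracy from true and predicted labels via a confusion matrix. The section does not say how metrics were averaged over behavior classes; the function name, the label encoding, and the toy labels below are hypothetical.

```python
import numpy as np


def per_class_metrics(y_true, y_pred, n_classes):
    """Per-class precision, recall, F1 and overall accuracy via a confusion matrix."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)  # rows: true class, columns: predicted class
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp  # predicted as class k, but actually another class
    fn = cm.sum(axis=1) - tp  # truly class k, but predicted as another class
    zeros = np.zeros_like(tp)
    precision = np.divide(tp, tp + fp, out=zeros.copy(), where=(tp + fp) > 0)
    recall = np.divide(tp, tp + fn, out=zeros.copy(), where=(tp + fn) > 0)
    pr_sum = precision + recall
    f1 = np.divide(2 * precision * recall, pr_sum, out=zeros.copy(), where=pr_sum > 0)
    accuracy = tp.sum() / cm.sum()
    return precision, recall, f1, accuracy


# Hypothetical labels: 0 = feeding, 1 = resting, 2 = aggression
y_true = [0, 0, 1, 1, 2, 2, 2, 0]
y_pred = [0, 1, 1, 1, 2, 0, 2, 0]
p, r, f1, acc = per_class_metrics(y_true, y_pred, n_classes=3)
print("precision", p, "recall", r, "F1", f1, "accuracy", acc)
print("macro F1:", f1.mean(),
      "F1 of macro P/R:", 2 * p.mean() * r.mean() / (p.mean() + r.mean()))
```

For these toy labels the accuracy is 0,75. Averaging the per-class F1-scores gives about 0,756, whereas the harmonic mean of the averaged precision and recall (both 7/9) is about 0,778, so the averaging order matters when comparing reported figures.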

Figure 6. Result of recall

DISCUSSION

Despite the significant improvements achieved by the ResNet, MobileNet, YOLOX-DNAM, and YOLOX-NAM methods, serious limitations remain. ResNet has high computational overhead, which makes it less suitable for real-time applications. MobileNet lacks accuracy in complex scenarios, and the precision of the YOLOX models degrades in dense scenes. Although ResNet, MobileNet, YOLOX-DNAM, and YOLOX-NAM are effective, they do not perform well enough in sophisticated object detection scenarios, especially where both accuracy and computational efficiency matter.(19,20) To overcome these challenges, a novel technique was proposed that optimizes both accuracy and processing efficiency to improve performance on complex detection tasks.

CONCLUSIONS

This paper proposed a novel approach for identifying pig behavior using a Ladybug Beetle-Optimized Adaptive Convolutional Neural Network (LBO-ACNN). The method overcomes the difficulties of manual observation by using deep learning techniques, with the collected data preprocessed by the discrete wavelet transform to remove noise. LBO-ACNN identifies and categorizes different forms of aggression, such as head-butting, ear-biting, and tail-biting, with a recall of 92,51 %, accuracy of 94,78 %, F1-score of 93,31 %, and precision of 94,17 %. The system also improves the reliability of behavior documentation on large farms, and the study supports the care and welfare of pigs by delivering rich data on their behavior and health concerns. The LBO-ACNN approach may struggle with varying lighting conditions and diverse pig environments, which could affect accuracy. Future research could integrate the system with real-time farm management tools and improve robustness against environmental variables to ensure consistent performance and broader applicability across pig breeding scenarios.

BIBLIOGRAPHIC REFERENCES

1. Racewicz P, Ludwiczak A, Skrzypczak E, Składanowska-Baryza J, Biesiada H, Nowak T, Nowaczewski S, Zaborowicz M, Stanisz M, Ślósarz P. Welfare health and productivity in commercial pig herds. Animals. 2021 Apr 20;11(4):1176. https://doi.org/10.3390/ani11041176

2. Delsart M, Pol F, Dufour B, Rose N, Fablet C. Pig farming in alternative systems: strengths and challenges in terms of animal welfare, biosecurity, animal health and pork safety. Agriculture. 2020 Jul 2;10(7):261. https://doi.org/10.3390/agriculture10070261

3. Chen C, Zhu W, Norton T. Behaviour recognition of pigs and cattle: Journey from computer vision to deep learning. Computers and Electronics in Agriculture. 2021 Aug 1;187:106255. https://doi.org/10.1016/j.compag.2021.106255

4. Guo Q, Sun Y, Orsini C, Bolhuis JE, de Vlieg J, Bijma P, de With PH. Enhanced camera-based individual pig detection and tracking for smart pig farms. Computers and Electronics in Agriculture. 2023 Aug 1;211:108009. https://doi.org/10.1016/j.compag.2023.108009

5. Canario L, Bijma P, David I, Camerlink I, Martin A, Rauw WM, Flatres-Grall L, Zande LV, Turner SP, Larzul C, Rydhmer L. Prospects for the analysis and reduction of damaging behaviour in group-housed livestock, with application to pig breeding. Frontiers in Genetics. 2020 Dec 23;11:611073. https://doi.org/10.3389/fgene.2020.611073

6. Arulmozhi E, Bhujel A, Moon BE, Kim HT. The application of cameras in precision pig farming: An overview for swine-keeping professionals. Animals. 2021 Aug 9;11(8):2343. https://doi.org/10.3390/ani11082343

7. Reza MN, Ali MR, Kabir MS, Karim MR, Ahmed S, Kyoung H, Kim G, Chung SO. Thermal imaging and computer vision technologies for the enhancement of pig husbandry: a review. Journal of Animal Science and Technology. 2024 Jan;66(1):31. https://doi.org/10.5187/jast.2024.e4

8. Riekert M, Klein A, Adrion F, Hoffmann C, Gallmann E. Automatically detecting pig position and posture by 2D camera imaging and deep learning. Computers and Electronics in Agriculture. 2020 Jul 1;174:105391. https://doi.org/10.1016/j.compag.2021.106213

9. Alameer A, Buijs S, O’Connell N, Dalton L, Larsen M, Pedersen L, Kyriazakis I. Automated detection and quantification of contact behaviour in pigs using deep learning. Biosystems Engineering. 2022 Dec 1;224:118-30. https://doi.org/10.1016/j.biosystemseng.2022.10.002

10. Witte JH, Gerberding J, Melching C, Gómez JM. Evaluation of deep learning instance segmentation models for pig precision livestock farming. In: Business Information Systems. 2021 Jul 2 (pp. 209-220). https://doi.org/10.52825/bis.v1i.59

11. Wutke M, Heinrich F, Das PP, Lange A, Gentz M, Traulsen I, Warns FK, Schmitt AO, Gültas M. Detecting animal contacts—A deep learning-based pig detection and tracking approach for the quantification of social contacts. Sensors. 2021 Nov 12;21(22):7512. https://doi.org/10.3390/s21227512

12. Alameer A, Kyriazakis I, Dalton HA, Miller AL, Bacardit J. Automatic recognition of feeding and foraging behaviour in pigs using deep learning. Biosystems Engineering. 2020 Sep 1;197:91-104. https://doi.org/10.1016/j.biosystemseng.2020.06.013

13. Alameer A, Kyriazakis I, Bacardit J. Automated recognition of postures and drinking behaviour for the detection of compromised health in pigs. Scientific Reports. 2020 Aug 12;10(1):13665. https://doi.org/10.1038/s41598-020-70688-6

14. Gan H, Ou M, Huang E, Xu C, Li S, Li J, Liu K, Xue Y. Automated detection and analysis of social behaviors among preweaning piglets using key point-based spatial and temporal features. Computers and Electronics in Agriculture. 2021 Sep 1;188:106357. https://doi.org/10.1016/j.compag.2021.106357

15. Hakansson F, Jensen DB. Automatic monitoring and detection of tail-biting behavior in groups of pigs using video-based deep learning methods. Frontiers in Veterinary Science. 2023 Jan 11;9:1099347. https://doi.org/10.3389/fvets.2022.1099347

16. Liu D, Oczak M, Maschat K, Baumgartner J, Pletzer B, He D, Norton T. A computer vision-based method for spatial-temporal action recognition of tail-biting behaviour in group-housed pigs. Biosystems Engineering. 2020 Jul 1;195:27-41. https://doi.org/10.1016/j.biosystemseng.2020.04.007

17. Chen C, Zhu W, Steibel J, Siegford J, Wurtz K, Han J, Norton T. Recognition of aggressive episodes of pigs based on convolutional neural network and long short-term memory. Computers and Electronics in Agriculture. 2020 Feb 1;169:105166. https://doi.org/10.1016/j.compag.2019.105166

18. Ji H, Yu J, Lao F, Zhuang Y, Wen Y, Teng G. Automatic position detection and posture recognition of grouped pigs based on deep learning. Agriculture. 2022 Aug 26;12(9):1314. https://doi.org/10.3390/agriculture12091314

19. Shao H, Pu J, Mu J. Pig-posture recognition based on computer vision: Dataset and exploration. Animals. 2021 Apr 30;11(5):1295. https://doi.org/10.3390/ani11051295

20. Li Y, Li J, Na T, Yang H. Detection of attack behaviour of pig based on deep learning. Systems Science & Control Engineering. 2023 Dec 31;11(1):2249934. https://doi.org/10.1080/21642583.2023.2249934

FINANCING

No financing.

CONFLICT OF INTEREST

The authors declare that there is no conflict of interest.

AUTHORSHIP CONTRIBUTION

Validation: Anton Louise De Ocampo, Rowell Hernandez.

Drafting - original draft: Xiaolu Zhang.

Writing - proofreading and editing: Jeffrey Sarmiento.

ANNEXES

| Abbreviation | Meaning |
|---|---|
| KF | Kalman Filter |
| CNN | Convolutional Neural Network |
| NNV | Non-Nutritive Visit |
| DL | Deep Learning |
| RNN | Recurrent Neural Network |
| 2D | 2-Dimensional |
| 1D-CNN | 1-Dimensional Convolutional Neural Network |
| IDWT | Inverse Discrete Wavelet Transform |
| 3D | 3-Dimensional |
| CNN-LSTM | Convolutional Neural Network - Long Short-Term Memory |